Shortlist of the best submissions 2024

The award for technological excellence in the field of industrial machine vision is presented during VISION.

All five companies will present their innovations in a one-hour session at the Industrial Vision Days on 9 October. Warren Clark, Publisher of Imaging and Machine Vision Europe, the sponsor of the €3,000 prize, will host the VISION Award ceremony. At the end, jury member Chris Yates (VISION VENTURES) will give the laudatory speech and name the overall winner.

These companies have made it onto the shortlist for the VISION Award:

Abstracts

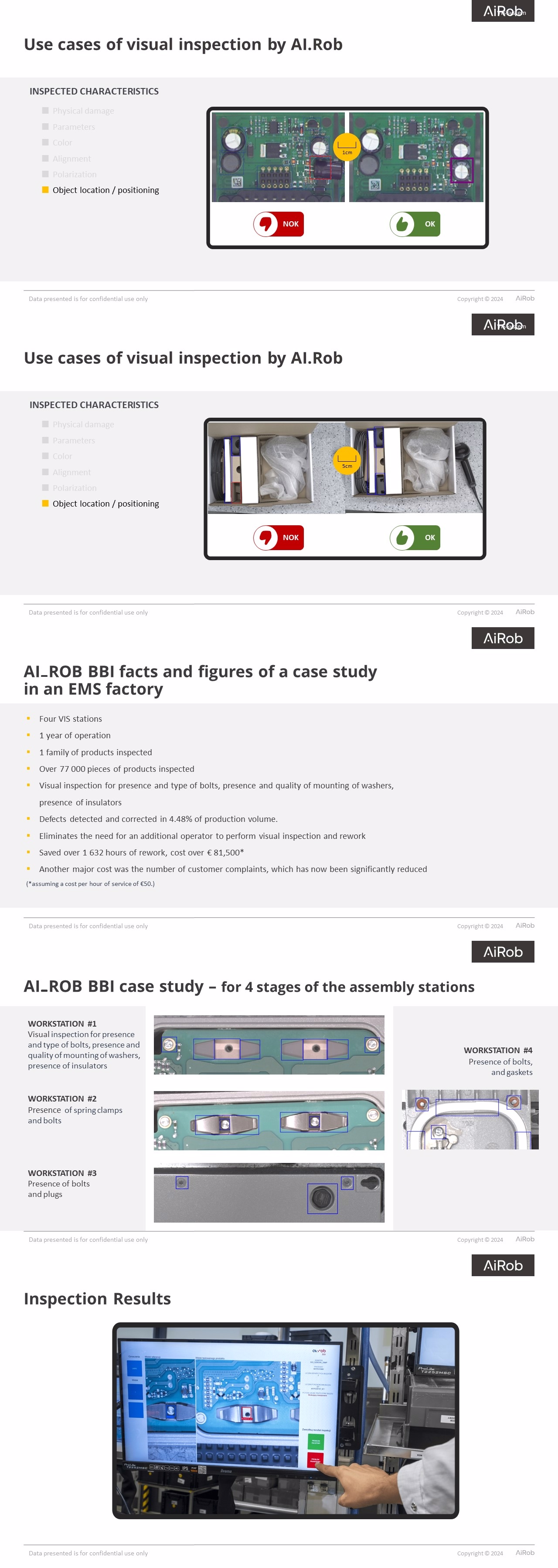

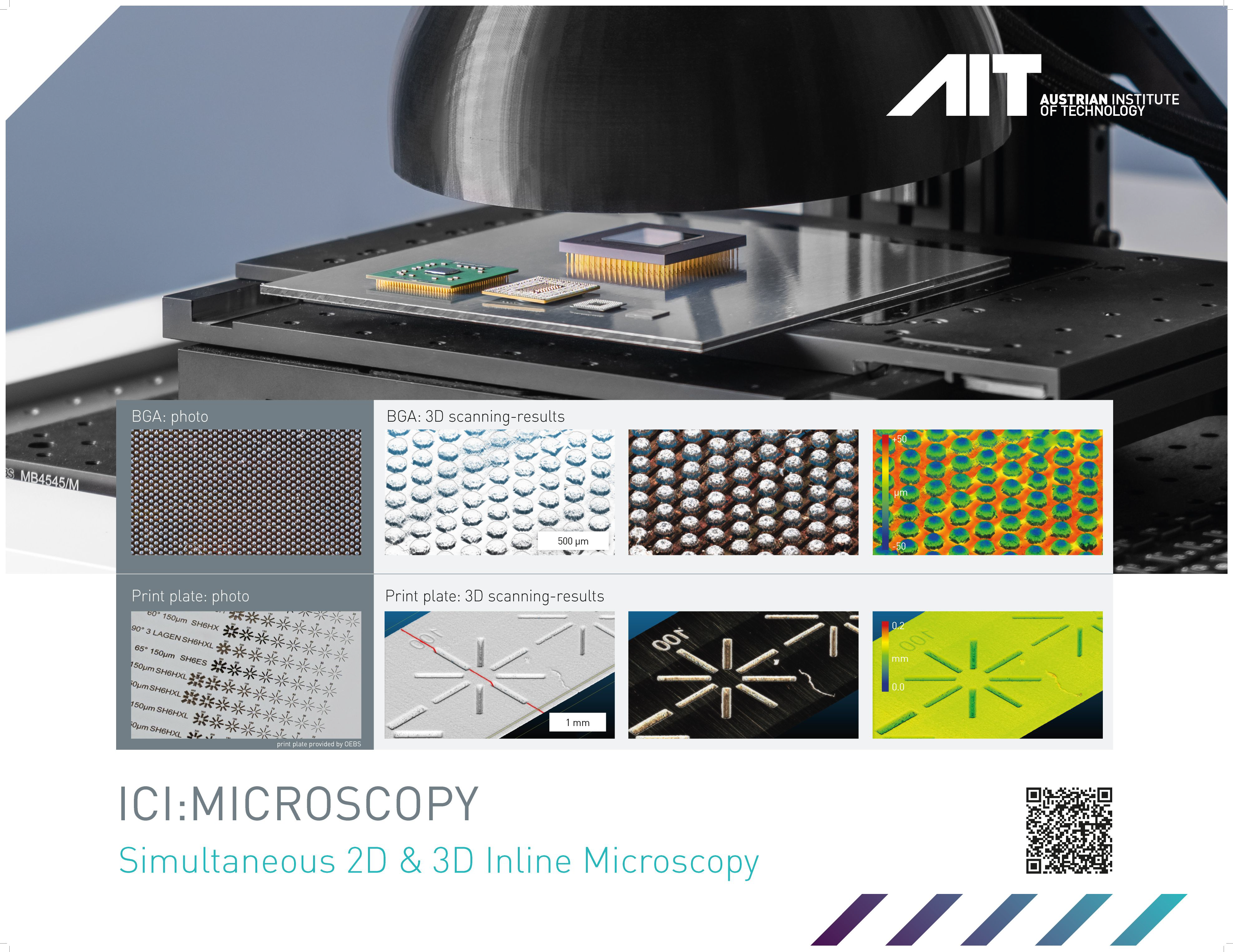

- AiRob: VIS Expert - Integrated Quality Assurance System Electronic Component Assembly

- AIT: 100% Inline Combined 2D/3D Microscopy

- Murrelektronik: Xelity Hybrid Switch - Bye-bye control cabinet!

- PxE Holographic Imaging: The Next Chapter in Digital Imaging

- Toshiba Teli Corporation: Revolutionizing Surface Flaw Detection on Glossy and Flat Materials

by Dariusz Hebisz, CEO

Description of the innovation

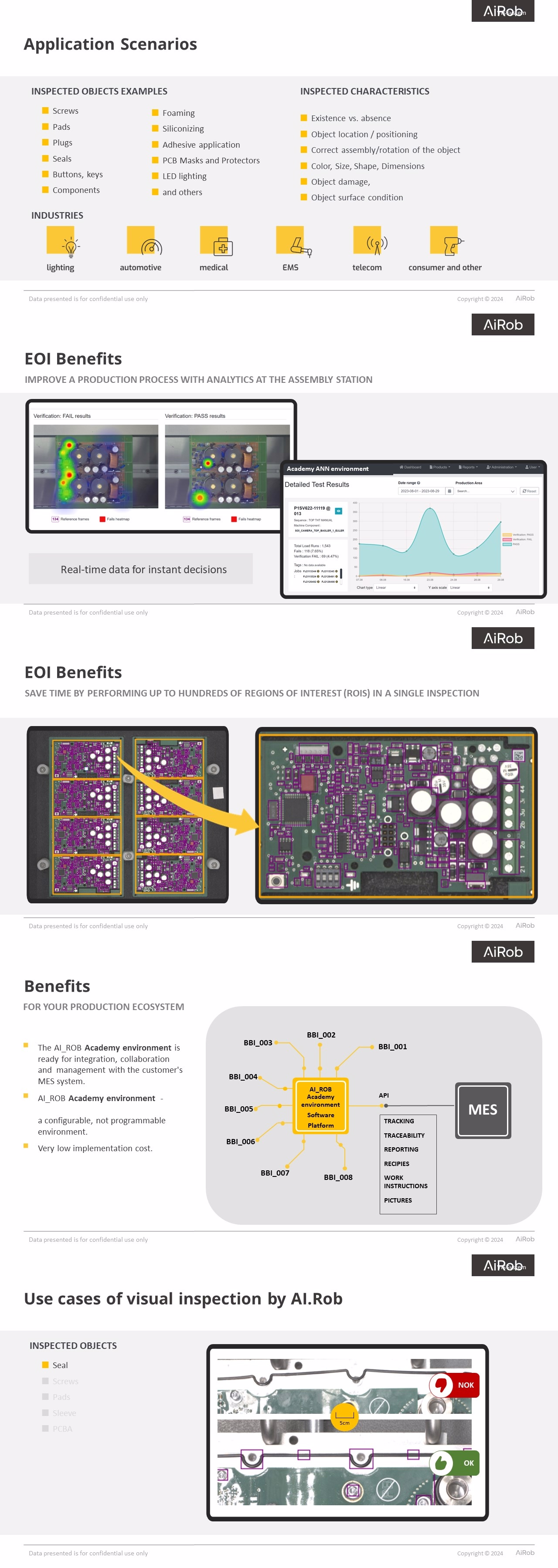

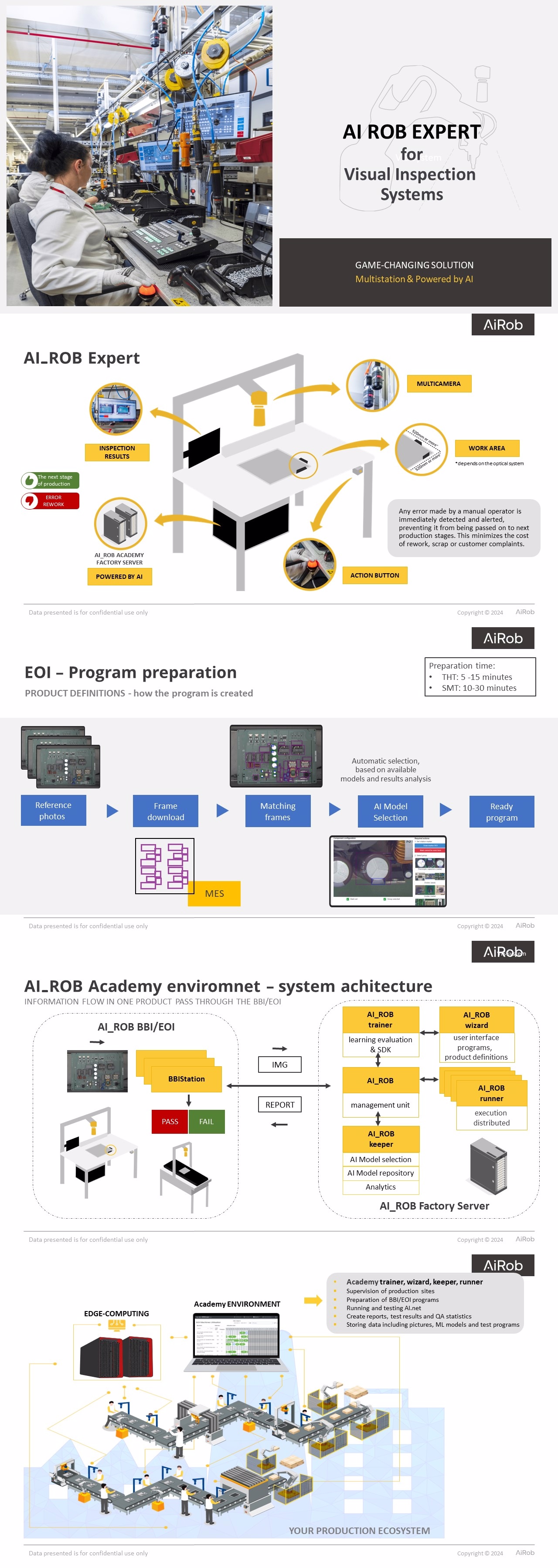

VIS Expert is an integrated quality assurance system for electronic THT (Through-Hole Technology) component assembly, designed to enhance the quality of PCBA (Printed Circuit Board Assembly) production and electronic products while reducing costs. This system leverages cutting-edge vision technology supported by AI within a unified ecosystem, providing real-time defect detection and analysis to improve production efficiency and product reliability. The system supports continuous process improvement through detailed analytical data and facilitates rapid deployment and scalability, making it a versatile solution for dynamic production environments.

- Integrated Ecosystem: Our system consists of a central server and a network of cameras located at key points in production. This makes it possible to monitor the production process in real time and detect any defects immediately. All VIS Expert stations are connected within a single intelligent ecosystem based on a central factory server. This allows for real-time exchange of algorithms, programs & procedures, product specifications and categories, visual metrics, and software updates.

- Instant Knowledge Transfer: Any new VIS Expert station added to the ecosystem can immediately absorb the necessary knowledge for efficient operation on the production floor.

- AI Neural Networks: AI neural networks trained on one VIS Expert station can be shared with others, creating a "robot university" known as AI.Rob Academy.

Technical details and advantages of the innovation

VIS Expert's technical foundation includes high-resolution cameras, advanced AI-supported software for image analysis, and a central data management server. Key technical features and advantages include:

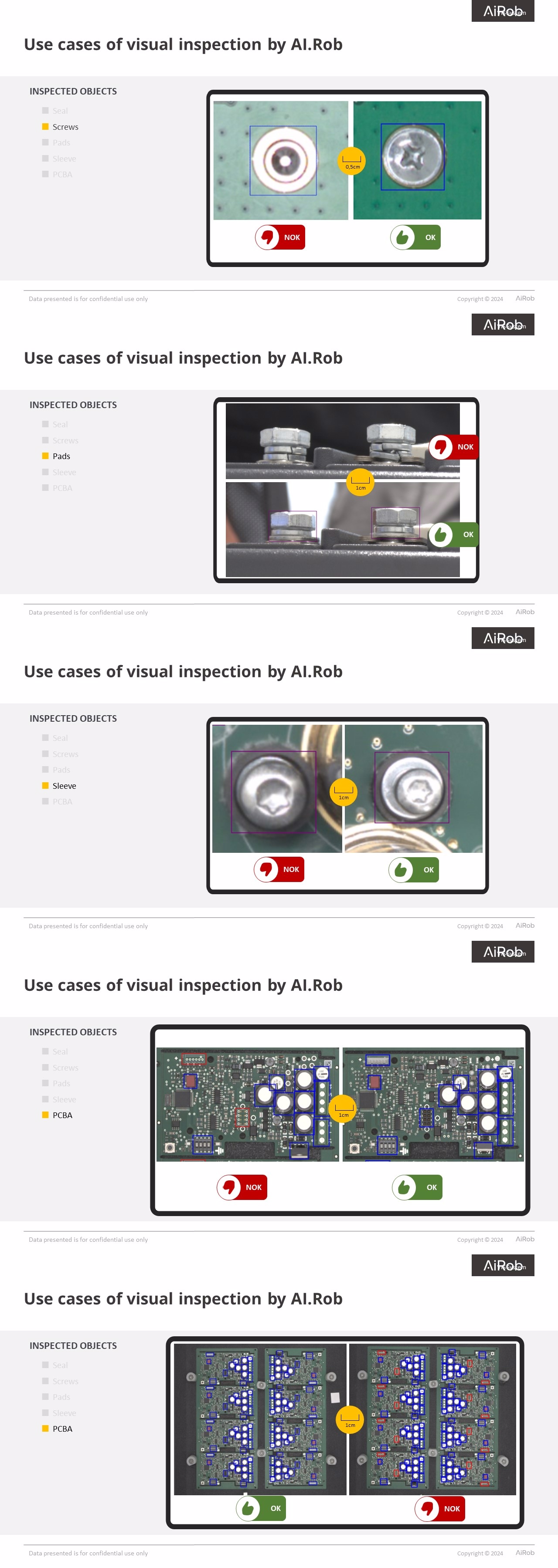

- Real-Time Defect Detection: Utilizes AI image processing algorithms to achieve 99.99% accuracy in identifying defects, with up to 1000 regions of interest (ROI) checked per image during a single test.

- Neural Network Flexibility: AI neural networks are tested and retrained on millions of components, enabling detection of errors such as:
  - MCD - Missing Component Detection: a generic network covering over 90% of standard tasks (presence vs. absence).
  - RCD - Reverse Component Detection: correct assembly/rotation of objects such as connectors, capacitors, pins, solenoids, and more.
  - ICD - Invalid Component Detection: object location/positioning, color, size, shape, dimensions, object damage, object surface condition, and others.

- Module for Creating Dedicated Neural Networks: Allows customers to develop their own dedicated networks.

- Instant Knowledge Transfer: New VIS Expert stations can immediately absorb operational knowledge from the ecosystem, ensuring efficient functionality.

- Rapid Installation: Setup involves simply installing a camera and downloading data from the cloud or factory server, making the system ready for use within minutes.

- MES Integration: Seamlessly integrates with Manufacturing Execution Systems (MES) for enhanced production management.

- Production Line Integration: Can be installed above production lines and integrated with transport systems to automatically redirect non-conforming products.

- Adaptive Algorithms: Capable of adjusting to changing lighting conditions, perspectives, and backgrounds using advanced algorithms for auto-exposure, white balance, and focus control.
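The "Adaptive Algorithms" item mentions auto-exposure. The source does not say how VIS Expert implements it, so the following is only a generic sketch of one common approach: a damped multiplicative control loop that nudges the exposure time until mean image brightness reaches a target. The target value, damping factor, exposure limits and the toy linear scene model are all hypothetical.

```python
# Generic auto-exposure sketch (hypothetical parameters, not VIS Expert code).


def mean_brightness(pixels: list[float]) -> float:
    """Average grey value of a frame on a 0..255 scale."""
    return sum(pixels) / len(pixels)


def next_exposure(exposure_us: float, measured: float, target: float = 118.0,
                  gain: float = 0.5, min_us: float = 50.0,
                  max_us: float = 20_000.0) -> float:
    """Damped multiplicative update toward the target brightness, clamped."""
    if measured <= 0:
        return max_us                       # black frame: open up fully
    ratio = target / measured               # > 1 means the frame is too dark
    proposed = exposure_us * ratio ** gain  # gain < 1 damps oscillation
    return max(min_us, min(max_us, proposed))


def simulate(scene_gain: float, exposure_us: float, steps: int = 20) -> float:
    """Toy scene: brightness grows linearly with exposure and clips at 255."""
    for _ in range(steps):
        frame = [min(255.0, scene_gain * exposure_us)] * 16
        exposure_us = next_exposure(exposure_us, mean_brightness(frame))
    return exposure_us


print(round(simulate(0.05, 1000.0)))  # 2360, i.e. 118 / 0.05 µs
```

Because the update is multiplicative, the same damping works whether a frame is far too dark or saturated. Clipping at 255 only slows convergence from the bright side.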

Installation Process

The system is easy to configure and does not require advanced programming knowledge, allowing users to quickly implement the system and start benefiting from it.

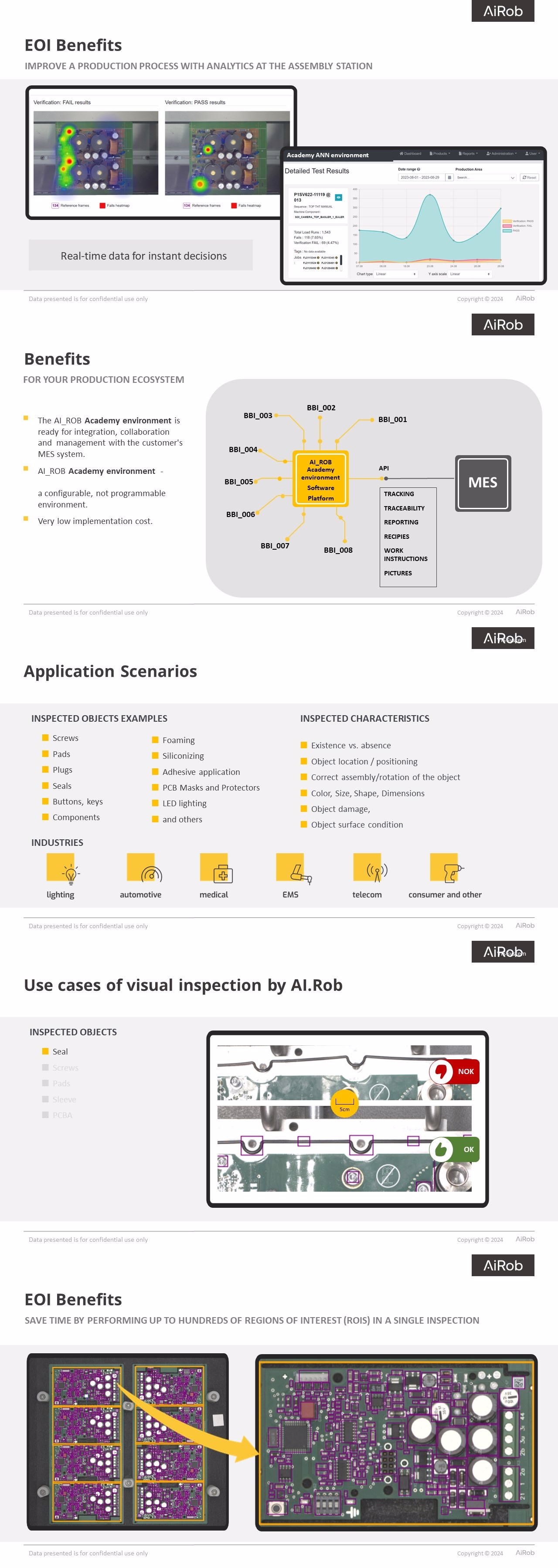

Additionally, the system generates detailed real-time reports and statistics on all detected errors, including their frequency and distribution. These are displayed graphically, for example as charts and heat maps, highlighting the areas that require the most attention. The system also supports analyses per workstation or employee to facilitate Lean implementation, and it instantly notifies management when serial errors occur, allowing immediate corrective action.
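The reporting described above boils down to counting defects along a few dimensions and watching for recurring ones. The following is a minimal sketch that assumes a hypothetical `Defect` record and a hypothetical serial-error rule: the same defect at the same board position must appear at least `threshold` times within the last `window` recorded defects. The source does not specify how VIS Expert defines a serial error. The per-component counts are the kind of data a heat map would be drawn from.

```python
from collections import Counter, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    workstation: str   # hypothetical IDs, e.g. "WS-01"
    component: str     # board position, e.g. "C12"
    kind: str          # "MCD", "RCD" or "ICD"


class DefectMonitor:
    """Running defect statistics plus a hypothetical serial-error rule."""

    def __init__(self, window: int = 5, threshold: int = 3) -> None:
        self.by_kind: Counter = Counter()
        self.by_workstation: Counter = Counter()   # per-station (Lean) analysis
        self.by_component: Counter = Counter()     # raw data for a heat map
        self.recent: deque = deque(maxlen=window)  # last `window` defects only
        self.threshold = threshold

    def record(self, defect: Defect) -> bool:
        """Store one defect; return True if it should trigger an alert."""
        self.by_kind[defect.kind] += 1
        self.by_workstation[defect.workstation] += 1
        self.by_component[defect.component] += 1
        key = (defect.component, defect.kind)
        self.recent.append(key)
        # Serial error: the same defect at the same position keeps recurring.
        return self.recent.count(key) >= self.threshold


monitor = DefectMonitor(window=5, threshold=3)
alerts = [monitor.record(Defect("WS-01", "C12", "RCD")) for _ in range(3)]
print(alerts)  # [False, False, True]
```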

Furthermore, VIS Expert can use a multi-camera setup, with cameras positioned not only above the object but also at multiple angles, enabling faster inspections without operators having to rotate the object. Current developments include tracking operators' hands in real time to ensure they pick components from the correct location and place them accurately. The neural networks accommodate left-handed, right-handed, and ambidextrous operators alike.

Relevance and application possibilities of the described innovation for the machine vision industry

VIS Expert is highly relevant to the machine vision industry as it showcases the potential of AI-enhanced vision systems in industrial applications. Its ability to provide real-time, high-accuracy defect detection makes it invaluable for quality assurance in electronic manufacturing. The system's modularity and scalability allow it to be easily adapted to various production environments, offering flexibility and efficiency.

- Quality Assurance: Ensures high-quality production standards by detecting and correcting errors in real time, significantly reducing the incidence of defective products.

- Process Optimization: Detailed analytical data enables continuous improvement of manufacturing processes by identifying and addressing inefficiencies.

- Cost Efficiency: Reduces costs associated with defective products, rework, and returns, while minimizing the need for additional human resources.

- Scalability and Flexibility: Easily scalable to meet varying production demands without extensive reconfiguration, supporting both small-scale and large-scale operations, such as hundreds of optical stations on a single factory server.

- Integration Capabilities: Enhances existing manufacturing setups through seamless integration with MES systems, contributing to a more streamlined and efficient production process.

VIS Expert is a proven solution already utilized by the largest Polish EMS company, ranked within the top 50 globally. Over the past five years, it has been refined and optimized on production lines, demonstrating its reliability and efficiency.

As a fully developed and commercially maintained product, it is readily available for installation in external companies. Unlike systems that must be custom-integrated, VIS Expert can be easily configured by individuals without advanced technical knowledge. This off-the-shelf system significantly simplifies implementation and operation, providing immediate benefits without the need for extensive customization.

Unique Selling Point (USP)

VIS Expert offers a configurable, non-programmable environment with real-time, high-accuracy AI defect detection, rapid deployment, and seamless MES integration. This ensures efficient, scalable, and cost-effective quality assurance for electronic THT component assembly, supporting both small-scale and large-scale operations, such as hundreds of optical stations on a single factory server.

When will the innovation be available?

It has been available on the market since the beginning of 2024.

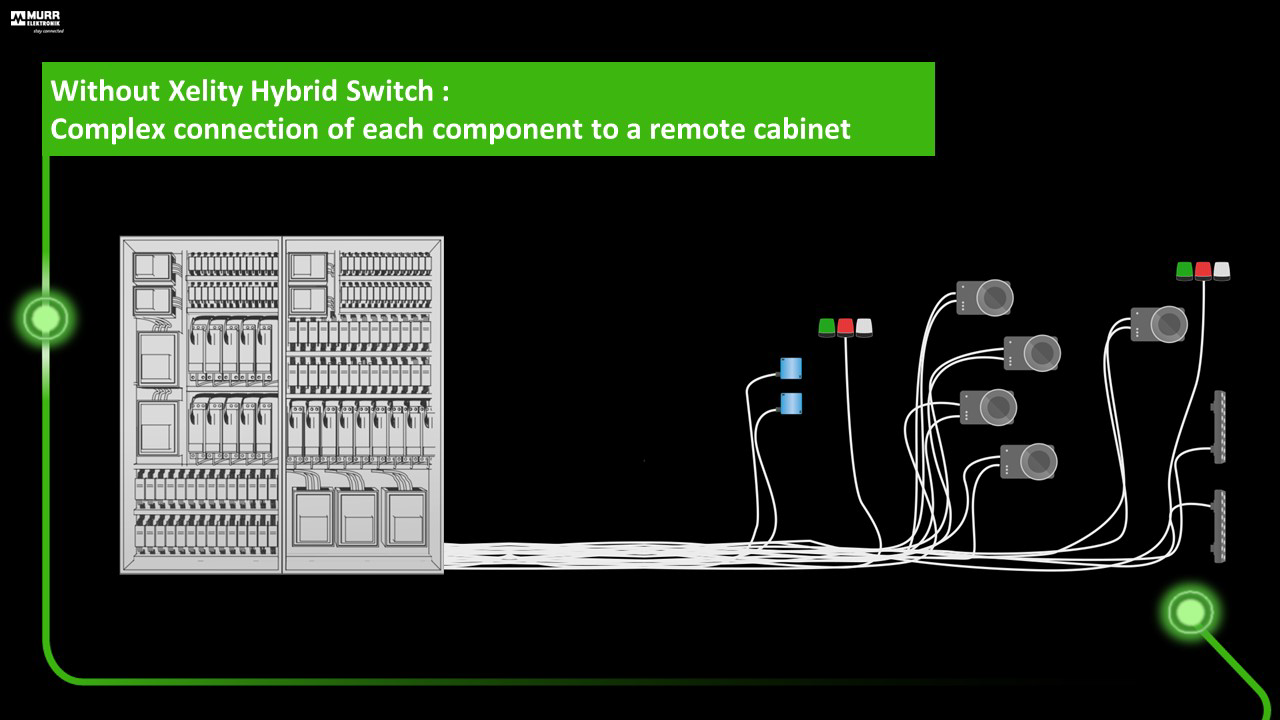

by Clabian Markus, Traxler Lukas, Thanner Petra

Description of the innovation

The AIT Austrian Institute of Technology presents a groundbreaking technology that, for the first time, enables combined 2D and 3D inspection in microscopy 100% inline. This innovation offers lateral sampling down to 700 nm per pixel and delivers up to 60 million registered 2D image pixels and 3D points per second.

The technology offers the following BENEFITS TO THE USER:

- FAST AND PRECISE QUALITY CONTROL: The technology combines 2D and 3D inspections in a single camera with standard optics. This allows comprehensive inspection of moving parts in real time.

- HIGH RESOLUTION AND ACCURACY: The technology achieves resolutions in the single-digit µm range (x/y/z), enabling precise inspections at the microscopic level.

- EFFICIENT USE OF PARALLAX EFFECTS: Instead of avoiding parallax effects, they are utilized to achieve fast and precise 3D depth reconstruction. Additionally, multiple captures from different viewing and lighting angles lead to better accuracy.

- VERSATILE APPLICATION POSSIBILITIES: Thanks to the multiple captures of the moving object and multiple time-triggered lighting modules, the system suits a wide range of industrial applications, including a large variety of dark and shiny materials and combinations of both, without needing to be adapted.

- HIGH SCANNING SPEEDS: Ideal for continuous process lines in the industry, the system achieves scanning speeds of up to 12 mm/s with a depth resolution of 2.8 µm and a lateral sampling of 700 nm/pixel.
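The parallax bullet can be illustrated with a deliberately simplified model. Under orthographic viewing, a feature at height h above a reference plane appears shifted by about h·tan(θ) in a view taken at angle θ. Fitting the observed shifts from several views against tan(θ) therefore recovers h, and each additional view improves the estimate. This is a textbook least-squares triangulation sketch, not AIT's reconstruction algorithm. The angles, the 20 µm test height and the 1.5 µm offset are made-up values; only the 700 nm/pixel sampling comes from the text.

```python
import math

SAMPLING_UM = 0.7  # lateral sampling per pixel, as quoted in the text


def height_from_parallax(angles_deg: list[float], shifts_px: list[float],
                         sampling_um: float = SAMPLING_UM) -> float:
    """Least-squares height (µm) from the parallax shift seen in each view.

    Simplified orthographic model: shift_i = h * tan(theta_i) + c, where c is
    an unknown lateral offset shared by all views. The slope of shift versus
    tan(theta) is the height h; extra views average out measurement noise.
    Needs at least two distinct viewing angles.
    """
    t = [math.tan(math.radians(a)) for a in angles_deg]
    s = [px * sampling_um for px in shifts_px]  # pixels -> micrometres
    t_mean, s_mean = sum(t) / len(t), sum(s) / len(s)
    cov = sum((ti - t_mean) * (si - s_mean) for ti, si in zip(t, s))
    var = sum((ti - t_mean) ** 2 for ti in t)
    return cov / var


# Synthetic check: a feature 20 µm above the reference plane, four views.
angles = [-10.0, 0.0, 10.0, 20.0]
shifts = [(20.0 * math.tan(math.radians(a)) + 1.5) / SAMPLING_UM for a in angles]
print(round(height_from_parallax(angles, shifts), 6))  # 20.0
```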

AIT's ici:microscopy is a revolutionary technology for high-speed inline 3D microscopy, offering the highest precision and efficiency in quality control. It is particularly suitable for high-quality manufacturing industries and industrial processes where continuous movements occur in the process line.

Technical details and advantages of the innovation

Previous technologies for 2D and 3D inspection in microscopy were often separate systems that either provided only 2D images or 3D scans. Examples of such systems include conventional 2D camera systems and specialized 3D laser scanners. These systems often required complex setups, special optics, and time-consuming processes to achieve accurate results. Typical systems such as confocal microscopes or stereo microscopes achieved only limited sampling rates and struggled to provide both high resolution and measurement speed.

The new technology from the AIT Austrian Institute of Technology overcomes these limitations by enabling seamless, combined 2D and 3D inspection inline. With lateral sampling down to 700 nm per pixel and the ability to deliver up to 60 million registered 2D image pixels and 3D points per second, this innovation offers significantly higher efficiency and precision.
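The quoted figures can be cross-checked with simple arithmetic. The following assumes that the 12 mm/s scan speed, the 700 nm/pixel lateral sampling and the 60 million pixels per second all apply to the same configuration, which the source does not state. It also assumes that line spacing along the scan direction equals the lateral sampling. Under those assumptions, the implied line rate and field width follow directly:

```python
# Figures quoted in the text; assumed (not confirmed) to hold simultaneously.
SPEED_MM_S = 12.0        # scan speed
SAMPLING_UM = 0.7        # lateral sampling per pixel
THROUGHPUT_PX_S = 60e6   # registered 2D pixels / 3D points per second

line_rate_hz = SPEED_MM_S * 1000.0 / SAMPLING_UM   # one line per 0.7 µm of travel
pixels_per_line = THROUGHPUT_PX_S / line_rate_hz   # samples across the scan
field_width_mm = pixels_per_line * SAMPLING_UM / 1000.0

print(f"{line_rate_hz:.0f} lines/s, {pixels_per_line:.0f} px/line, "
      f"{field_width_mm:.2f} mm field width")
# 17143 lines/s, 3500 px/line, 2.45 mm field width
```

Under these assumptions the sensor would cover a strip roughly 2.45 mm wide; the actual field of view may differ.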

SPECIFIC DIFFERENCES

1. SPEED: Conventional confocal microscopes and 3D laser scanners often require multiple passes to capture complete data. The AIT technology achieves this in real time with 60 million 2D and 3D points per second.

2. INTEGRATION: While other systems require separate devices for 2D and 3D inspections, the AIT technology combines both in a single device, leading to simpler installation and use.

3. RESOLUTION: The new technology offers extremely high resolution, with lateral sampling down to 700 nm per pixel, whereas many conventional systems have to trade resolution for speed.

4. FLEXIBILITY: Conventional systems are often material-dependent and require special settings or calibrations for different surfaces. The AIT technology is largely independent of the surface properties of the material to be inspected.

These advances allow the inspection of moving parts in real time, resulting in significant improvements in quality control and productivity in industrial applications.

COMPARISON WITH OTHER TECHNOLOGIES

1. WHITE LIGHT INTERFEROMETRY: While these systems offer high depth resolution, their long acquisition times and the need for point-to-point scanning make them unsuitable for inline applications. In contrast, ICI enables rapid acquisition at high resolution, making it ideal for continuous production processes.

2. LASER LINE TRIANGULATION: Laser line triangulation systems are often limited by the camera's frame rate and the smallest achievable line focus, which restricts their resolution and acquisition speed. ici:microscopy offers superior lateral sampling of 700 nm per pixel and high scan speed, overcoming these limitations. Additionally, laser line systems lack high-quality 2D colour information, which ici:microscopy provides pixel-registered with the 3D point cloud.

3. DEPTH FROM FOCUS (DfF) and CONFOCAL MICROSCOPY: Both methods are unsuitable for inspecting moving objects because they require multiple images at different focus positions, which makes inefficient use of data acquisition bandwidth. In contrast, ICI captures and processes data in real time, which is crucial for industrial inline applications.

Relevance and application possibilities of the described innovation for the machine vision industry

ici:microscopy from the AIT Austrian Institute of Technology represents a significant advancement in the image processing industry by enabling simultaneous 2D and 3D inline inspection of micro-sized features and defects. This innovation is particularly relevant and beneficial for several reasons:

- INCREASED EFFICIENCY: By combining 2D and 3D inspection in a single device, the technology reduces the need for multiple inspection systems. This saves space, reduces complexity, and lowers costs for companies.

- ENHANCED PRECISION: With lateral sampling down to 700 nm per pixel and the ability to deliver up to 60 million registered 2D image pixels and 3D points per second, the technology offers unparalleled accuracy. This is particularly important for quality control in high-precision industries such as semiconductor manufacturing, medical technology, and microelectronics.

- IMPROVED REAL-TIME MONITORING: The ability to inspect moving parts in real time allows companies to respond immediately to production errors. This minimizes scrap and rework, resulting in more efficient production.

- VERSATILE APPLICATION POSSIBILITIES: The technology is largely independent of the surface properties of the materials under inspection and can be used in a variety of industrial applications, from wire inspection and print image inspection to electronics and surface inspection.

- EASY INTEGRATION: Thanks to its compact and user-friendly design, ici:microscopy can be easily integrated into existing production lines. This allows companies to expand their inspection capabilities without extensive modifications.

- FUTURE-PROOF: By using the latest sensor technology and algorithms, the technology ensures that companies stay at the forefront of technological advancements and are prepared for future challenges.

In summary, ici:microscopy offers the image processing industry a powerful solution that significantly improves both the efficiency and the accuracy of quality control, thereby contributing to the optimisation of industrial processes.

Application fields

ici:microscopy can be used in a variety of production-related applications. Here are a few examples:

- Semiconductor Manufacturing:
  - Inspection of wafers for defects and impurities.
  - Checking the structure and thickness of semiconductor layers.

- Electronics Manufacturing:
  - Inspection of printed circuit boards (PCBs) for faulty solder joints, cracks, and connections.
  - Verifying the placement and quality of components on the board.

- Automotive Industry:
  - Inspection of engine and transmission components for surface defects and dimensional accuracy.
  - Examination of welds and adhesive joints.

- Medical Technology:
  - Inspection of medical implants and instruments for dimensional accuracy and surface quality.
  - Examination of microfluidic chips and other medical devices.

- Packaging Industry:
  - Inspection of packaging materials for defects and irregularities.
  - Checking labels and prints for correct placement and quality.

- Pharmaceutical Industry:
  - Inspection of tablets and capsules for shape, size, and surface defects.
  - Checking vials and ampoules for cracks and contaminants.

- Plastics Industry:
  - Inspection of injection-moulded parts for dimensional accuracy and surface quality.
  - Examination of plastic films for thickness and uniformity.

- Printing Industry:
  - Inspection of printed products for colour fidelity, resolution, and surface quality.
  - Verification of security features in printed documents.

All these applications and many more will benefit from ici:microscopy, the new high-speed industrial 2D & 3D microscope. Its high resolution, high-speed acquisition and simultaneous, pixel-registered 2D images and 3D models enable precise and fast quality control directly in the production line.

Unique Selling Point (USP)

AIT's ici:microscopy is an innovative solution for inline 3D microscopy. With its ability to quickly and accurately reconstruct 3D models at high resolution and speed, it offers significant advantages over traditional systems. This technology enables more efficient and accurate quality control in industrial production, leading to improved product quality and reduced failure rates.

When will the innovation be available?

Already available.

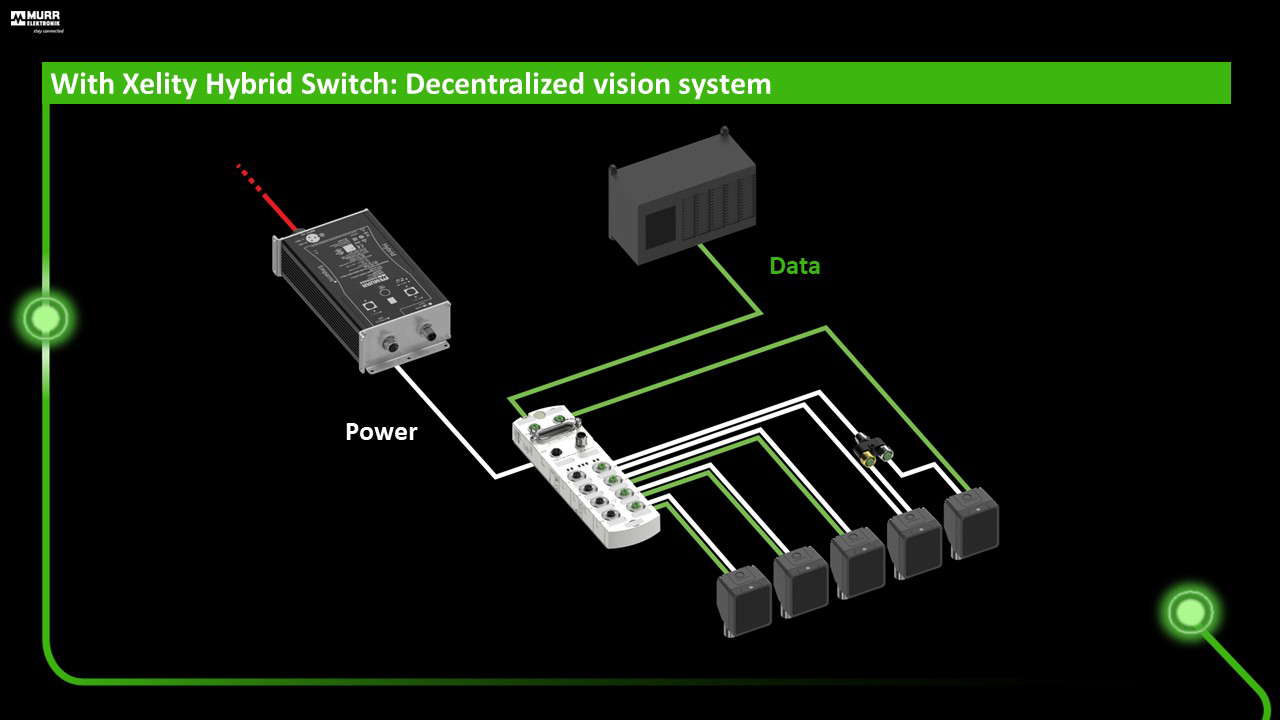

by Simon Knapp

Description of the innovation

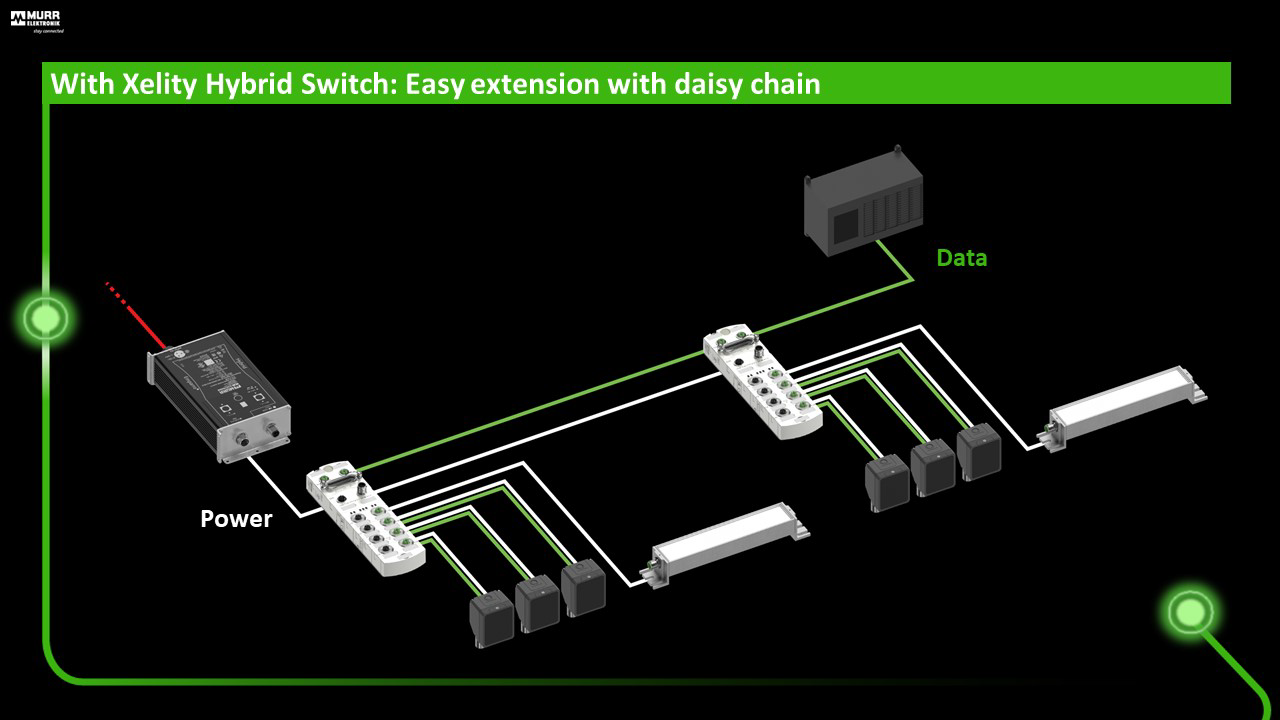

Murrelektronik revolutionizes the connectivity and networking of machine vision systems with the novel Xelity Hybrid Switch. Xelity Hybrid Switch acts as a local hub for the power, data, and trigger signals of a vision system. It allows system designers to avoid complex and costly long-range connections to control cabinets on the factory floor. Cameras and peripherals such as lighting, sensors etc. are all connected to a small, ruggedized switch located at the inspection cell.

The hybrid switch distributes both power and data to all components of the vision system. It can power and trigger up to four devices simultaneously, either cameras alone or a combination of cameras and strobe lights.

The system is highly scalable thanks to daisy chain connectivity: multiple switches can be connected to control even more components within a system and operate them simultaneously.

Thanks to Xelity Hybrid Switch, each vision system becomes an autonomous island connected to the factory network with just one cable. This makes it very easy to move it along the production line, expand it or duplicate it to adapt to changing manufacturing processes.

The simplification of connectivity also means that the risk of failure is dramatically reduced, as all components that work together are connected to each other locally. Maintenance and troubleshooting are also easier thanks to the built-in diagnostic LEDs. Operators on the line can immediately see if a connection is not working properly. Further diagnostic details are available via the web server.

Xelity Hybrid Switch is highly standardized and relies on industrial-grade M12 connectors. Its simplicity makes it very easy for staff with little expertise to perform simple tasks such as replacing or adding a camera or a light. Using standard technology also means full compatibility with any component, regardless of brand.

Murrelektronik's Xelity Hybrid Switches save costs by eliminating kilometers of cable and speeding up installation and maintenance. They make vision systems modular, scalable, and easy to adapt to changing manufacturing environments. Finally, their ease of use means that even less experienced staff can operate them. This new networking technology brings machine vision to the next level of flexibility for the smart factory of the future.

Technical details and advantages of the innovation

Xelity Hybrid Switch is a rugged, industrial-grade connectivity hub for power, data and trigger in a machine vision system. It is IP67 compliant, resistant to shocks and vibrations, and withstands operating temperatures from -40°C to +55°C. All connectors are screw-locked M12 connectors. This makes it highly versatile for demanding industrial environments.

Xelity Hybrid Switch supports Gigabit Ethernet, the most common camera interface technology in the machine vision industry. The switch supports advanced network management features such as Jumbo Frames, NAT, NAC and QoS. Each device features four data ports and four 4 A NEC Class 2 power ports, which means that up to four cameras can be operated with one switch.

Several devices can be connected to each other as a daisy chain, which opens endless possibilities to expand the network of cameras and other peripherals. One signal such as a trigger can be broadcast to all devices connected to a switch or even all devices in the daisy chain for perfect synchronization. One switch only needs a power supply and a network connection, which means a whole system is connected to the factory network with just one cable, even if it includes several switches in a daisy chain.
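The port arithmetic behind the daisy chain can be sketched in a few lines. This is a hypothetical planning helper, not a Murrelektronik tool or API. The names `Switch`, `plan_chain` and `broadcast_trigger` are illustrative. The sketch assumes that each switch offers four device ports and that the link to the next switch in the chain does not occupy one of them.

```python
import math
from dataclasses import dataclass, field

PORTS_PER_SWITCH = 4  # up to four cameras/strobes per switch, per the text


@dataclass
class Switch:
    name: str
    devices: list[str] = field(default_factory=list)


def plan_chain(devices: list[str]) -> list[Switch]:
    """Fill switches port by port; switch i links to switch i + 1.

    Assumes the daisy-chain link does not occupy one of the device ports.
    """
    count = max(1, math.ceil(len(devices) / PORTS_PER_SWITCH))
    chain = [Switch(f"switch-{i + 1}") for i in range(count)]
    for idx, dev in enumerate(devices):
        chain[idx // PORTS_PER_SWITCH].devices.append(dev)
    return chain


def broadcast_trigger(chain: list[Switch]) -> list[str]:
    """A trigger forwarded along the chain reaches every attached device."""
    return [dev for sw in chain for dev in sw.devices]


devices = ["cam1", "strobe1", "cam2", "strobe2", "cam3", "strobe3"]
chain = plan_chain(devices)
print([len(sw.devices) for sw in chain])  # [4, 2]
print(len(broadcast_trigger(chain)))      # 6
```

However many switches the chain needs, the whole chain still uses a single uplink cable to the factory network.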

The decentralized approach of Xelity Hybrid Switch makes maintenance and troubleshooting easy: All the components of a vision system are interconnected locally. This makes it very easy to exchange a camera or add a strobe on site, without having to figure out connections in a remote cabinet. As a result, operators on the production line can perform simple maintenance tasks faster and without the need for expert personnel. LEDs on the device itself provide feedback on the correct operation of each port while the online diagnostics tools allow for more in-depth troubleshooting.

Relevance and application possibilities of the described innovation for the machine vision industry

Machine vision is everywhere in today's smart factories to ensure efficiency, quality assurance and safety.

At the same time, modern manufacturing must adapt quickly to changes in the manufacturing process and/or in the product to be manufactured. This requires flexible, modular and scalable vision systems. Finally, the lack of skilled staff means automation equipment and vision systems must be plug-and-play and easy to operate and maintain.

Murrelektronik's hybrid switches and decentralized approach are the answer to these challenges when it comes to connectivity. Having local, self-managed systems only connected to a central server via one cable makes it much easier for manufacturers to modify their processes and deploy their vision systems.

For system integrators, using hybrid switches speeds up development and installation, as the whole system can be designed independently of the factory network and simply docked into it with one cable.

- Use case 1: Upgrade of a machine with machine vision

Using a Xelity Hybrid Switch, upgrading an existing machine or production line with a vision system is easy, as it no longer requires accommodating all the new components in an already crowded control cabinet. Just connect all the components to each other locally and connect the switch to the cabinet with one cable.

- Use case 2: Easy extension of an existing vision system

A simple inspection with one camera and a strobe can be built with one switch and later extended with a second camera and its strobe, all triggered simultaneously. Complex multi-camera inspection systems are also possible with multiple switches connected in a daisy chain.

- Use case 3: Reduced cabling in robotic applications

A vision-guided robot can be fitted with several cameras for 3D vision, with one small hybrid switch providing power, trigger signals and data connections to all cameras while requiring minimal space inside the cell.

Unique Selling Point (USP)

Murrelektronik's Xelity Hybrid Switch is a paradigm change in machine vision. It turns the vision system into a decentralized sub-network that can easily be scaled up or down, maintained and operated locally. The days of cluttered control cabinets connected by kilometers of cable are over. This makes machine vision systems easier and faster to set up, more flexible, scalable and cost-effective.

When will the innovation be available?

Since April 2024

Description of the innovation

We're all familiar with cameras; they have quickly become an integral part of our lives, whether it's to document life's moments on our phones, unlock our devices hands-free, keep our homes safe from intruders, or assist us while driving. And though imaging techniques have made great strides over the decades, the fundamental physics of digital cameras has remained largely unchanged and is fully analogous to that of film cameras.

At the heart of any camera is the lens, which focuses rays of light to form an image on the image plane. In the past, a light-sensitive film was placed on the image plane to record the intensity of light and color of the image formed by the lens. This film was then chemically processed to enable recording and reproduction of the captured image.

Digital cameras operate in precisely the same way, the only difference being the light-recording medium. The core of a digital camera is a semiconductor device (such as a CMOS sensor), which consists of an array of light-sensitive photodiodes that convert photons reaching the image plane into electrons. Each pixel accumulates an electrical charge proportional to the intensity of the light it receives, and the charge is then converted to a digital value. To obtain a color image, the CMOS sensor uses color filters to measure both light intensity and color. This is often a Bayer filter, which consists of a mosaic pattern of red, green, and blue filters over the individual pixels. During this process, each pixel on the sensor captures one color channel of light, depending on the filter directly above it (red, green or blue).
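The mosaic idea can be made concrete with a small sketch. It assumes the common RGGB arrangement (the source only says "red, green, and blue filters") and shows how each pixel keeps a single channel, with green sampled twice as often as red or blue. The function names are illustrative. A real image processor would then interpolate the two missing channels at every pixel (demosaicing).

```python
from collections import Counter

# RGGB is one common Bayer layout (BGGR, GRBG and GBRG also exist).
BAYER_RGGB = (("R", "G"), ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_color(row: int, col: int) -> str:
    """Color of the filter sitting above pixel (row, col)."""
    return BAYER_RGGB[row % 2][col % 2]


def mosaic(rgb_image: list[list[tuple[int, int, int]]]) -> list[list[int]]:
    """Raw sensor output: each pixel keeps only the channel its filter passes."""
    return [
        [pixel[CHANNEL_INDEX[filter_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


patch = [[(200, 180, 160)] * 2 for _ in range(2)]
print(mosaic(patch))  # [[200, 180], [180, 160]]
counts = Counter(filter_color(r, c) for r in range(4) for c in range(4))
print(sorted(counts.items()))  # [('B', 4), ('G', 8), ('R', 4)]
```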

Next, the camera's image processor takes the digital values from the sensor, processes them, and creates a full-color image. Post-processing can also be applied for color adjustment, noise reduction, and sharpening.

The method described above (whether for film or digital) is made possible by treating light as rays, a fundamental premise of optical imaging. This ray model (geometric optics) is the basis of raytracing, which is used to design camera lenses and lies at the core of classical digital image processing and even computer graphics rendering.
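As a small illustration of the ray model, the sketch below traces paraxial rays through an ideal thin lens. It uses the textbook thin-lens equation and ray-transfer steps, not anything specific to PxE. The 50 mm focal length and 1 m object distance are arbitrary example values. Rays leaving one object point at different angles all land at the same image height, which is the behaviour lens design relies on.

```python
def image_distance(f_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for the image distance d_i."""
    if object_mm <= f_mm:
        raise ValueError("object at or inside the focal length: no real image")
    return 1.0 / (1.0 / f_mm - 1.0 / object_mm)


def trace_ray(y0: float, u0: float, f_mm: float, object_mm: float,
              image_mm: float) -> float:
    """Paraxial trace of one ray (height y0, slope u0) to the image plane."""
    y = y0 + u0 * object_mm   # free propagation from object to lens
    u = u0 - y / f_mm         # refraction by an ideal thin lens
    return y + u * image_mm   # free propagation from lens to image plane


d_i = image_distance(50.0, 1000.0)  # 50 mm lens, object 1 m away
heights = [trace_ray(2.0, u0, 50.0, 1000.0, d_i) for u0 in (-0.01, 0.0, 0.01)]
print(round(d_i, 2), [round(h, 4) for h in heights])
# 52.63 [-0.1053, -0.1053, -0.1053]
```

The common image height equals the object height times the magnification, -d_i/d_o.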

While digital cameras can capture high-resolution images, they have inherent limitations. One important limitation is that conventional cameras cannot capture 3D information: today's cameras capture only two-dimensional images. As one can imagine, scientists have tried to crack this problem since as far back as 1908.

The first was the French scientist Gabriel Lippmann, who invented light-field imaging with the promise of achieving 3D imaging with a single camera. It wasn't until 20 years ago that a team at Stanford truly implemented such a camera. Light-field imaging captures and records the propagation paths of light rays through space as they enter the camera, recording each ray's position and direction. Once recorded, this information can be used to perform digital raytracing (just like in computer graphics rendering) but in reverse, from the camera back out to the real world, and thus to calculate the distance to every point in the imaged scene (i.e., a three-dimensional map). It can also be used to digitally refocus rays and to correct optical distortions and aberrations. In other words, light-field imaging promised to unlock 3D optical imaging and to become the holy grail of digital image processing.

However, once this technology was put into practice, its severe limitations and compromises became apparent. The main problem is that conventional light-field imaging involves an inherent trade-off between the angular information of the light rays and the overall image resolution. As a result, light-field images have very low resolution compared to conventional images taken with the same imaging optics (main lens). It also means that it is virtually impossible to shrink the imaging optics without severely degrading performance. Another drawback is the very high algorithmic load required to perform digital raytracing for full 3D reconstruction and refocusing/deblurring. Consequently, light-field imaging failed to gain significant traction either in the consumer space, where a small form factor, high image quality and lean processors are paramount, or in applications such as microscopy and machine vision, where high resolution is required.
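The resolution trade-off is easiest to see with numbers. In a conventional microlens-array light-field camera, each microlens covers a patch of N × N sensor pixels that records ray directions, so the rendered image has roughly one pixel per microlens. The following is a minimal sketch of that relationship. The 40-megapixel sensor and the values of N are illustrative and not taken from the source.

```python
def spatial_resolution(sensor_px: int, angular_samples: int) -> float:
    """Image pixels left when each microlens spans N x N sensor pixels.

    In a conventional microlens-array (plenoptic) camera, every microlens
    becomes roughly one output pixel, and the N x N pixels under it record
    ray directions instead of spatial detail.
    """
    return sensor_px / angular_samples ** 2


for n in (1, 5, 10):
    mp = spatial_resolution(40_000_000, n) / 1e6
    print(f"{n}x{n} angular samples -> {mp:.1f} MP")
# 40.0 MP, 1.6 MP and 0.4 MP respectively
```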

The reduced resolution stems from light-field imaging's treatment of light as geometric rays. Quantum mechanics, however, offers the principle of wave-particle duality: light, like matter, behaves both as a particle and as a wave. In general, this duality lets us have our cake and eat it too: we can gain additional information through the phase of the light's wavefront (the wave analogue of a ray's direction) while still retaining maximal optical resolution.

Holography, which treats light as waves, is not an entirely new concept, but it has generally involved lasers, and the cost of such systems has been prohibitive for the market. This is where PxE comes in.

PxE's founders wanted to harness the principle of duality (treating light as both a particle and a wave), but they also understood that lasers were not practical for a scalable camera solution. After extensive research and numerous design iterations, PxE was able to build holographic optics that work with ambient white light as opposed to lasers and thereby make the technology useful in real life. Using white light holograms, PxE can extract color and infrared from the light's wavelength, the depth information from the curvature of the wavefront, and blurring effects from the distortion of the wavefront.

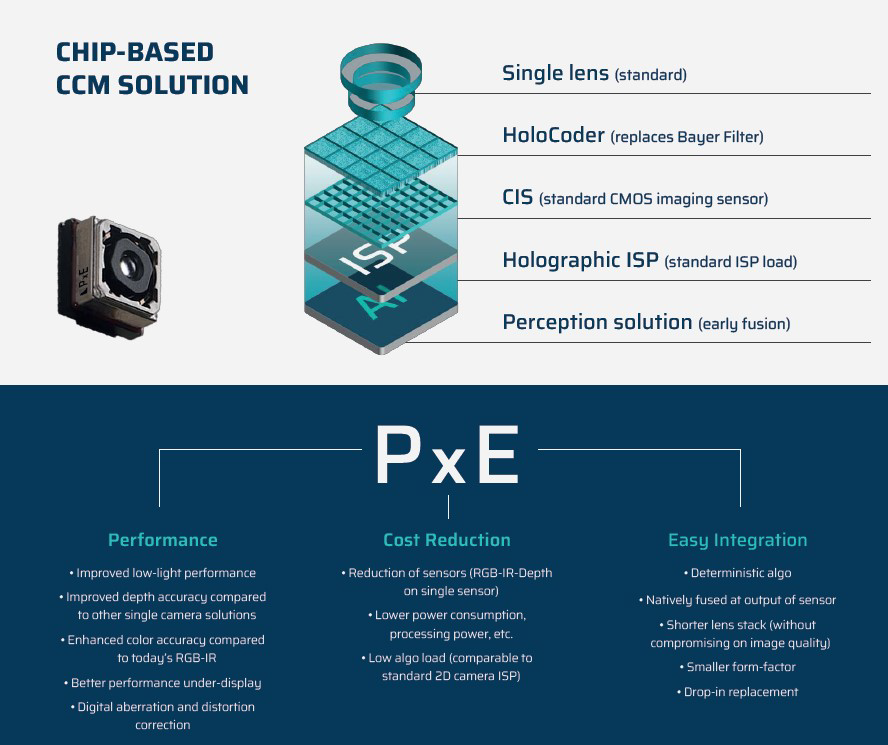

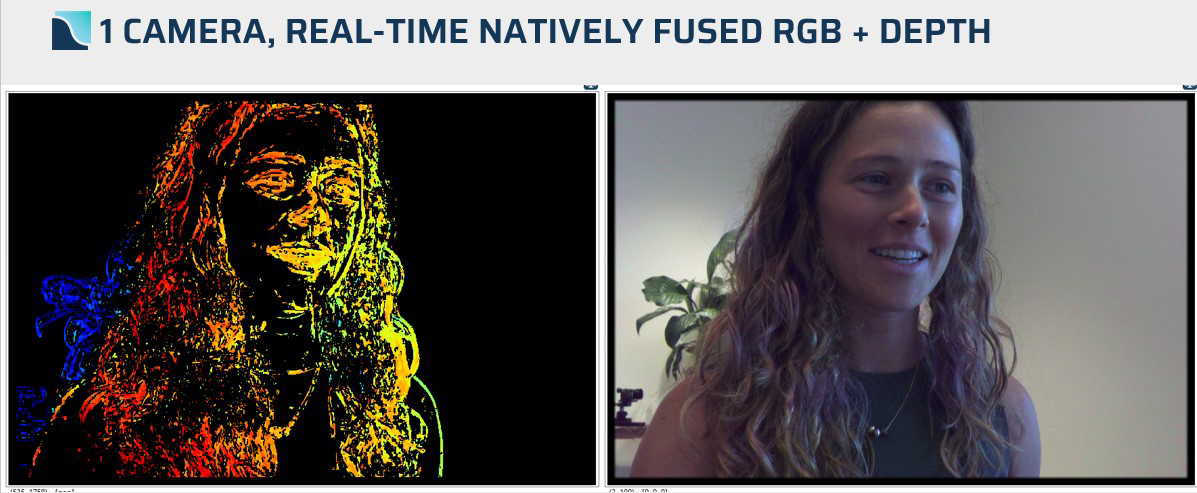

In doing so, PxE's camera overcomes the limitations of light-field imaging while maintaining the same fundamental structure of a camera (i.e., lens, CMOS sensor and a filter, albeit a holographic one). The result? A camera that can reproduce color, infrared, and depth images, with high resolution and at a consumer price point.

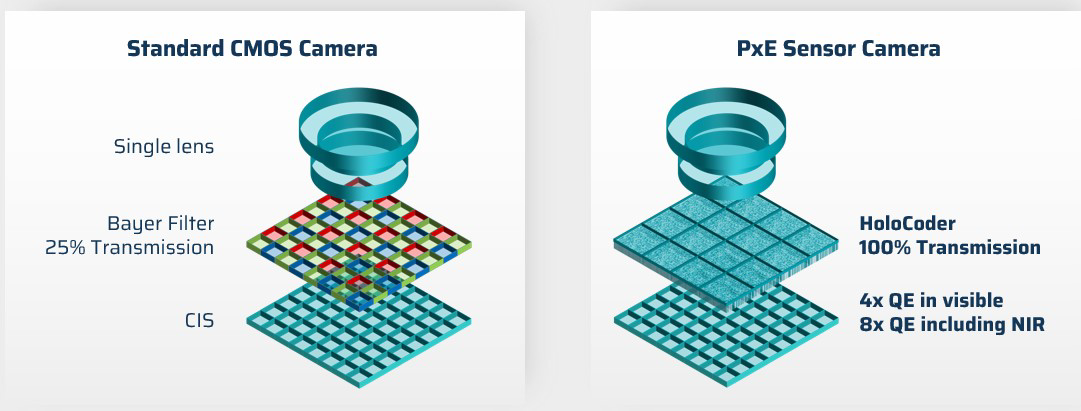

An added benefit of PxE's method is the quality of images in low-light scenarios compared to those generated through a Bayer filter. Since the Bayer filter passes only one channel of light (red, green or blue) to each pixel, roughly two-thirds of the incoming light is necessarily discarded at each pixel. In practice, the Bayer filter transfers only 25% of the available light to the sensor. As a result, images taken in very low-light environments are dark and of very poor quality. By contrast, PxE's solution does not filter light in the same way and thus transfers 100% of the available light to the sensor, producing high-quality images even in ultra-low-light environments.

PxE's solution

PxE's innovative technology is a combination of hardware and software, implemented on a standard CMOS image sensor and a standard camera ISP.

Standard digital cameras consist of a CMOS sensor with a Bayer filter and a lens. To implement PxE's holographic imaging, the Bayer filter is replaced with a layer of holographic optics, PxE's HoloCoder. Instead of red, green and blue being separated by the Bayer filter, the HoloCoder produces a white light hologram that encodes the wavefront information of the light. The captured holograms are decoded in real time by PxE's proprietary, fully deterministic algorithm and output as natively fused RGB-IR-Depth (color, infrared and 3D) channels. In addition to the multi-channel output, the camera's color image has 4x the light sensitivity of standard cameras, because the HoloCoder transmits 100% of the light, compared with 25% through a Bayer filter.
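The 4x figure follows directly from the two transmission values quoted in the text. Photographers often express such gains in stops, where one stop is a factor of two in light. Here is a short worked example that uses the source's 25% and 100% figures and a hypothetical 40 ms exposure:

```python
import math

BAYER_TRANSMISSION = 0.25      # share of light reaching the sensor, as quoted
HOLOCODER_TRANSMISSION = 1.00  # PxE's stated figure for the HoloCoder

gain = HOLOCODER_TRANSMISSION / BAYER_TRANSMISSION  # 4x more light
stops = math.log2(gain)                             # 1 stop = factor of 2
equivalent_exposure_ms = 40.0 / gain                # hypothetical 40 ms Bayer shot

print(gain, stops, equivalent_exposure_ms)  # 4.0 2.0 10.0
```

Taking the quoted figures at face value, the same signal level would need only a quarter of the exposure time, a two-stop advantage.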

PxE's technology is agnostic to the underlying hardware, and can therefore be implemented on any sensor, any camera, and with any lens. Furthermore, the decoding algorithms have a computational footprint similar to a standard camera ISP and thus do not require additional processors. In other words, all existing digital imaging sensors and cameras can be enhanced with RGB-IR-Depth channels plus ultra low-light sensitivity, without any additional cost or compromises on image resolution or quality.

Summary

With over 20 patents granted and pending, spanning the HoloCoder hardware as well as the algorithms for decoding white-light holograms, PxE's solution is perhaps the first meaningful transformation in the imaging market since the invention of the digital camera. The technology reinvents the basic premise of capturing images: no longer a digital analogue of film cameras, but a new way to see the world and perceive far more information than ever before. It is the world's first camera to capture RGB, IR and depth without degrading image resolution, and it works in any illumination condition without compromising performance.

PxE's innovation has the potential to retrofit and replace all existing 2D cameras on the market - changing the foundation of digital cameras from 2D to 3D and beyond.

Technical details and advantages of the innovation

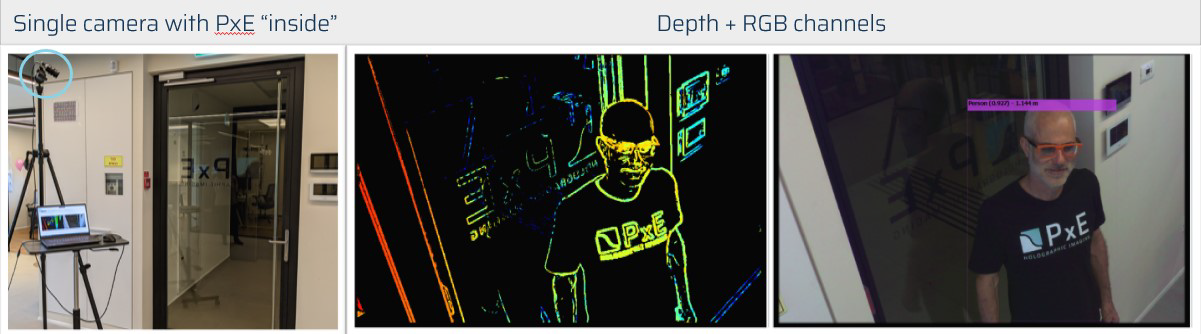

PxE's innovation is the ability to output color, infrared and depth images from a single sensor, in any illumination condition, without degrading image quality. This reduces cost and complexity while increasing the capabilities of any standard camera on the market today. PxE achieves this by treating light as waves (holography) rather than as rays, as standard imaging technology does. As a result, PxE cameras can do much more than traditional cameras: extract 3D, color and infrared information, generate high-quality images in low-light conditions, deblur images, perform digital aberration correction, be placed behind display screens, and use a shorter lens track, all without degrading the image.

As in all cameras, PxE's solution consists of a standard lens, a standard CMOS imaging sensor with a filtering element, and a standard image signal processor (ISP). In standard cameras, the filtering element consists of a microlens array and a Bayer filter, integrated onto the CMOS imaging sensor in wafer post-processing. Bayer filters are simple and cost-effective to manufacture, and they provide good color reproduction for most everyday needs. However, they have several limitations. For example, the Bayer filter discards about 75% of the incoming light, so image sharpness and color accuracy suffer, especially in low-light conditions or noisy environments. Furthermore, the Bayer filter is designed only for the visible spectrum and cannot produce infrared or 3D images. By contrast, PxE has designed a diffractive element, the HoloCoder, which replaces the Bayer filter on the CMOS imaging sensor and enables a suite of enhancements. It is implemented as a passive, transparent dielectric phase mask using standard wafer post-processing techniques with minimal changes. This proprietary piece of hardware is designed to generate unique diffraction patterns that encode wavefront and spectral information, i.e. white-light holograms. The spectral information encodes color and IR and can even be used for multi-spectral imaging. The wavefront information can be used to infer depth from the change in wavefront curvature as objects move closer to or farther from the camera. The standard CMOS sensor captures the resulting white-light hologram as an encoded black-and-white image, which PxE's holographic decoding algorithm then processes.
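The idea that wavefront curvature encodes depth can be illustrated with textbook paraxial optics. The sketch below assumes an ideal thin lens and an on-axis point object. It is not PxE's decoding algorithm, and the function names and the 1 m focus distance are hypothetical. Curvature is expressed as vergence (dioptres, 1/m): the mismatch between the image-side vergence and the vergence that would focus exactly on the sensor changes monotonically with object distance, so it can be inverted into depth.

```python
# Paraxial thin-lens sketch (assumption): depth from wavefront curvature.
# Thin lens: 1/z + 1/z_image = 1/f, so the image-side vergence is 1/f - 1/z.
# A sensor focused at z_focus sits where 1/s = 1/f - 1/z_focus.

def defocus_vergence(z: float, z_focus: float) -> float:
    """Vergence mismatch (1/m) at the lens pupil for an object at distance z (m).

    (1/f - 1/z) - (1/f - 1/z_focus) = 1/z_focus - 1/z; the focal length cancels.
    """
    return 1.0 / z_focus - 1.0 / z


def depth_from_vergence(dv: float, z_focus: float) -> float:
    """Invert defocus_vergence: recover the object distance z (m)."""
    return 1.0 / (1.0 / z_focus - dv)


if __name__ == "__main__":
    z_focus = 1.0  # hypothetical: lens focused at 1 m
    for z in (0.5, 1.0, 2.0, 10.0):
        dv = defocus_vergence(z, z_focus)
        print(f"z = {z:5.1f} m -> dV = {dv:+.3f} D -> recovered z = "
              f"{depth_from_vergence(dv, z_focus):.1f} m")
```

The sign of the mismatch tells whether the object is nearer or farther than the focus distance, and its magnitude tells by how much.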

The decoding algorithm is based on an accurate model of optical wave propagation throughout the entire system - from the objects in the world, through the lens, the white-light hologram formation by the HoloCoder, and finally the raw image captured by the CMOS sensor. The algorithm is fully deterministic - there are no training sets, heuristics, machine learning or neural networks at the decoding stage.
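"Model-based and fully deterministic" can be made concrete with a generic linear inverse problem. This is a minimal sketch, assuming a known linear forward model `A` that maps scene values `x` to raw samples `y = A x`. The scene is recovered by Tikhonov-regularized least squares, solving (AᵀA + λI)x = Aᵀy. The toy matrix and the helper names are hypothetical stand-ins, not PxE's optical model or API. No training data is involved, and identical input always gives identical output.

```python
# Deterministic, model-based decoding sketch (assumption: linear forward model).

Matrix = list[list[float]]
Vector = list[float]


def forward(A: Matrix, x: Vector) -> Vector:
    """Simulate the raw measurement y = A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def solve(M: Matrix, b: Vector) -> Vector:
    """Solve M x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(M, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def decode(A: Matrix, y: Vector, lam: float = 1e-9) -> Vector:
    """Tikhonov decode: solve (A^T A + lam I) x = A^T y."""
    n = len(A[0])
    ata = [[sum(A[k][i] * A[k][j] for k in range(len(A))) + (lam if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    aty = [sum(A[k][i] * y[k] for k in range(len(A))) for i in range(n)]
    return solve(ata, aty)


if __name__ == "__main__":
    # Toy model: 4 raw samples encode 3 scene values (hypothetical numbers).
    A = [[1.0, 0.5, 0.0],
         [0.2, 1.0, 0.3],
         [0.0, 0.4, 1.0],
         [0.5, 0.5, 0.5]]
    x_true = [0.8, 0.1, 0.5]
    y = forward(A, x_true)
    print(decode(A, y))                  # close to x_true
    print(decode(A, y) == decode(A, y))  # True: same input, same output
```

A real optical model would be far larger and usually solved with structured or iterative methods. The defining property shown here, a fixed computation derived from physics rather than learned weights, is what makes each output traceable to its input.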

Therefore, the output data (color image, IR image and depth map) are free of artifacts, and the decoding process is fully traceable, an important factor in safety-critical applications such as collision avoidance. The algorithm is lean: the overall computational load for extracting RGB-IR-Depth data from a raw hologram is similar to that of a standard image signal processing pipeline (ISP). This allows easy deployment to edge devices using the available compute power. Furthermore, the physics behind the HoloCoder allows 100% transmission of the available light, compared with 25% for the Bayer filter. As such, the HoloCoder collects 4x the light in the visible and 8x the light in the near-IR, a big advantage in low-light scenarios. Another advantage is that all outputs (RGB, IR and depth) are obtained from the same raw hologram snapshot: natively fused and fully synchronized in time and space. This saves system integrators the significant effort of aligning, calibrating and synchronizing separate sensors for each channel, i.e. individual RGB, IR and 3D sensors. It also enables early-fusion neural networks, which offer greatly enhanced performance and lower inference loads compared with late-fusion networks that treat each information channel separately (as is typically done today on standard systems).
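The light-collection figures above translate directly into photographic stops and, under one added assumption, into signal-to-noise ratio. This worked sketch assumes a shot-noise-limited sensor, where SNR scales with the square root of the collected photon count. That assumption is not stated in the source, and real sensors also have read noise and dark current.

```python
import math

# Worked arithmetic for the quoted gains: 4x (visible, 100% vs 25% transmission)
# and 8x (near-IR, as stated). Assumption: shot-noise-limited sensor.

def light_gain(t_new: float, t_old: float) -> float:
    """Ratio of collected light for two optical transmissions."""
    return t_new / t_old


def gain_in_stops(gain: float) -> float:
    """Express a light gain in photographic stops (factors of two)."""
    return math.log2(gain)


def shot_noise_snr_gain(gain: float) -> float:
    """SNR improvement when the photon count rises by `gain` (shot-noise limit)."""
    return math.sqrt(gain)


if __name__ == "__main__":
    visible = light_gain(1.00, 0.25)   # 4x, as stated in the text
    near_ir = 8.0                      # 8x, as stated in the text
    for name, g in (("visible", visible), ("near-IR", near_ir)):
        print(f"{name}: {g:.0f}x light = {gain_in_stops(g):.0f} stops, "
              f"SNR x{shot_noise_snr_gain(g):.2f}")
```

This prints 2 stops and an SNR gain of x2.00 for the visible band, and 3 stops and x2.83 for the near-IR. In this model, a 4x light gain roughly doubles the SNR rather than quadrupling it.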

Returning to the premise of treating light as waves, PxE can also deblur images, since blur often arises from imperfect optics. PxE can algorithmically compensate for wavefront distortions in conventional cameras, yielding sharp images. The same principle also enables simpler lens designs and allows cameras to be placed under display screens without degrading image quality. These are all desirable features for cost reduction, smaller form factors and improved user experience, but they cannot be achieved today with standard imaging techniques.
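Algorithmic compensation for a known optical blur can be illustrated with classic deconvolution. This minimal sketch uses a Wiener-style regularized inverse filter, X = conj(H)·Y / (|H|² + k), on a 1D signal. It assumes the point-spread function (PSF) is known and acts as a circular convolution. This is a generic textbook technique chosen for illustration, not PxE's wavefront-based method, and the signal and PSF are made up.

```python
import cmath

# Wiener-style deconvolution sketch (assumptions: known PSF, circular blur).

def dft(x):
    """Naive discrete Fourier transform (O(N^2), fine for a short demo signal)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); returns real parts because the signals here are real."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def blur(signal, psf):
    """Circular convolution of signal with psf (both length N)."""
    n = len(signal)
    return [sum(signal[(t - s) % n] * psf[s] for s in range(n)) for t in range(n)]


def wiener_deblur(blurred, psf, k=1e-6):
    """Estimate the sharp signal; k damps frequencies where |H| is small."""
    H = dft(psf)
    Y = dft(blurred)
    X = [h.conjugate() * y / (abs(h) ** 2 + k) for h, y in zip(H, Y)]
    return idft(X)


if __name__ == "__main__":
    n = 16
    sharp = [1.0 if 5 <= t <= 8 else 0.0 for t in range(n)]  # hypothetical bar
    psf = [0.0] * n
    psf[0], psf[1], psf[-1] = 0.6, 0.2, 0.2                  # symmetric 3-tap blur
    restored = wiener_deblur(blur(sharp, psf), psf)
    print(max(abs(a - b) for a, b in zip(sharp, restored)))  # small residual
```

The regularization constant `k` trades sharpness against noise amplification. With real, noisy data it is typically set from the expected noise-to-signal ratio rather than kept near zero as in this noiseless demo.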

In addition to these technological enhancements, the use of a standard lens, standard CMOS sensor and standard ISP enables easy and cost-effective integration for manufacturers across industries.

Relevance and application possibilities of the described innovation for the machine vision industry

PxE's ability to capture multiple types of data, natively fused at the sensor output, enables a cost-effective, highly accurate and multi-functional infrastructure for machine vision systems. By providing a robust, low-cost backbone, it allows high-quality machine vision systems to be implemented across a host of industries and price points.

A superior backbone for machine vision

Image acquisition for full RGB-IR-Depth, enhanced sensitivity and deblurring data is obtained from a single snapshot of a PxE white-light holographic raw image. Our technology is compatible with both rolling and global shutter sensors, allowing seamless integration with existing data acquisition and processing flows, with no impact on throughput or scan methodology.
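Because every channel comes from one raw snapshot, a fused frame can be represented with a single timestamp and a single pixel grid. Early fusion then simply stacks the channels per pixel. This is a minimal sketch of such a data structure. The `FusedFrame` class, its field names and the 5-channel layout are hypothetical and not a PxE API.

```python
from dataclasses import dataclass

# Hypothetical container for a natively fused RGB-IR-Depth frame.

@dataclass(frozen=True)
class FusedFrame:
    timestamp_us: int                            # one capture time for all channels
    rgb: list[list[tuple[float, float, float]]]  # H x W colour
    ir: list[list[float]]                        # H x W near-infrared
    depth_m: list[list[float]]                   # H x W depth in metres

    def __post_init__(self) -> None:
        # Channels from one snapshot must share the same pixel grid.
        h, w = len(self.rgb), len(self.rgb[0])
        for name, plane in (("ir", self.ir), ("depth_m", self.depth_m)):
            if len(plane) != h or any(len(row) != w for row in plane):
                raise ValueError(f"{name} is not on the same pixel grid as rgb")

    def early_fusion(self) -> list[list[tuple[float, ...]]]:
        """Stack R, G, B, IR and depth into one 5-channel vector per pixel."""
        return [[(*self.rgb[y][x], self.ir[y][x], self.depth_m[y][x])
                 for x in range(len(self.rgb[0]))]
                for y in range(len(self.rgb))]


if __name__ == "__main__":
    frame = FusedFrame(
        timestamp_us=1_000,
        rgb=[[(0.2, 0.4, 0.6), (0.1, 0.1, 0.1)]],
        ir=[[0.3, 0.05]],
        depth_m=[[1.25, 2.0]],
    )
    print(frame.early_fusion()[0][0])  # (0.2, 0.4, 0.6, 0.3, 1.25)
```

With separate RGB, IR and 3D sensors, the stacking step would first require registration and time alignment across devices. The single-grid check in `__post_init__` stands in for the guarantee that a single-sensor capture provides.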

In addition, PxE is the only single-lens 3D imaging technology capable of obtaining diffraction-limited images, which is critical for inspection and metrology applications. Machine vision systems working at high magnification typically suffer from a shallow depth of focus, leading to compromised image quality or complicated and slow scanning procedures such as z-stacking.

PxE's deblurring capabilities can be used to extend the depth of focus by 10x. This enables single-snapshot, all-in-focus imaging, giving greater flexibility and performance when imaging and inspecting objects with significant 3D topography.
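The effect of a 10x depth-of-focus extension on z-stacking can be shown with a short worked example. This sketch assumes the common diffraction-limited approximation DOF ≈ λ/NA². Some texts use λ/(2·NA²), which changes the absolute numbers but not the 10x ratio. The wavelength, numerical aperture and part height are hypothetical illustration values.

```python
import math

# Worked example: z-stack slices needed before and after a 10x DOF extension.
# Assumption: DOF ~ lambda / NA^2, one focal slice per depth of focus.

def depth_of_focus_um(wavelength_um: float, na: float) -> float:
    """Approximate diffraction-limited depth of focus in micrometres."""
    return wavelength_um / na ** 2


def z_stack_slices(height_um: float, dof_um: float) -> int:
    """Focal slices needed to cover a given height with one slice per DOF."""
    return max(1, math.ceil(height_um / dof_um))


if __name__ == "__main__":
    dof = depth_of_focus_um(0.55, 0.3)   # about 6.1 um
    extended = 10 * dof                  # the 10x extension quoted above
    height = 50.0                        # hypothetical 50 um topography
    print(f"DOF {dof:.1f} um -> {z_stack_slices(height, dof)} slices")
    print(f"extended DOF {extended:.1f} um -> {z_stack_slices(height, extended)} slice(s)")
```

In this example, a 50 µm feature needs 9 focal slices at the native depth of focus but only a single snapshot at the extended one.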

Furthermore, current systems are constrained by the low-light limitations of 2D cameras. Vision systems in markets such as security, manufacturing and robotics will therefore benefit from PxE's enhanced low-light performance, without the need for additional sensors.

These advanced imaging capabilities, together with PxE's enhanced sensitivity and early-fusion RGB-IR-Depth data, will enable much better systems for object detection and recognition, quality control and inspection, and measurement and metrology.

For robots and drones, the single-camera form factor, low algorithmic load and low power consumption will allow for easy integration and scaling. Furthermore, PxE's fully synchronized RGB-IR-Depth data streams and deterministic algorithm stack will allow full traceability in mission-critical safety features such as obstacle avoidance and human-robot interaction.

Gesture recognition and human-machine interaction will also benefit from these features, as well as from the elegant under-display installation options available with PxE cameras.

Lastly, PxE can design cameras with multi-spectral capabilities beyond RGB-IR for enhanced monitoring of plant health in agriculture, and quality control of agricultural produce.

Multi-channel at the cost of 2D

Machine vision applications always involve a trade-off between performance and cost of ownership. Today, most machine vision applications are based on 2D cameras, while 3D solutions are used only for very critical applications. One of the driving factors is cost of ownership: a 3D system is typically 10-50x costlier than an equivalent 2D system in terms of cost per pixel scanned. The high cost of ownership of 3D systems stems from their increased complexity, since these solutions integrate several sensors that must be carefully synchronized and calibrated.

PxE's solution, being based on a single CMOS sensor, has a cost structure similar to that of a 2D camera in terms of complexity, parts count and throughput. In addition, its form factor is identical to that of a 2D camera, enabling drop-in replacement of the existing 2D sensor in any optical imaging system, from standard machine vision lenses to customized imaging and microscopy tools. Therefore, any 2D system can be retrofitted with a PxE camera, yielding a 3D solution with the cost of ownership of a 2D system. This can even be done as a field upgrade, allowing customers to leverage their existing installed base to meet tomorrow's challenging machine vision requirements.

Take, for example, the security market. Today, surveillance cameras typically use an RGB sensor for daylight and switch to IR mode for increased sensitivity at night. This is done either with two sensors or with a mechanically switched IR filter. PxE's RGB-IR capability achieves the same with greater sensitivity using a single sensor, eliminating the mechanical IR filter switch.

In addition, many such applications require distance measurement, e.g. to monitor crowds, persons approaching access points, etc. PxE's sensor is the only solution that does that with the existing camera, without additional 3D sensors.

Lastly, a limitation for the augmented and virtual reality markets has been the number of sensors needed to meet their demands. PxE's small form factor, low power consumption and single-shot RGB-IR-Depth capabilities allow for compact devices, easy integration and full perception. This will be critical in realizing the potential of AR/VR and truly bringing it to the masses.

Unique Selling Point (USP)

Natively fused RGB-IR-Depth outputs from a single standard camera, without degrading image quality. Enhanced low-light performance (4x that of current cameras), plus the ability to deblur images, perform digital aberration correction, place the lens behind highly curved or raked windshields and display screens, and use a simplified lens stack without degrading images. No additional cost compared to 2D cameras.

When will the innovation be available?

Demo units are already available

by Yutaka YAMAGUCHI

Description of the innovation

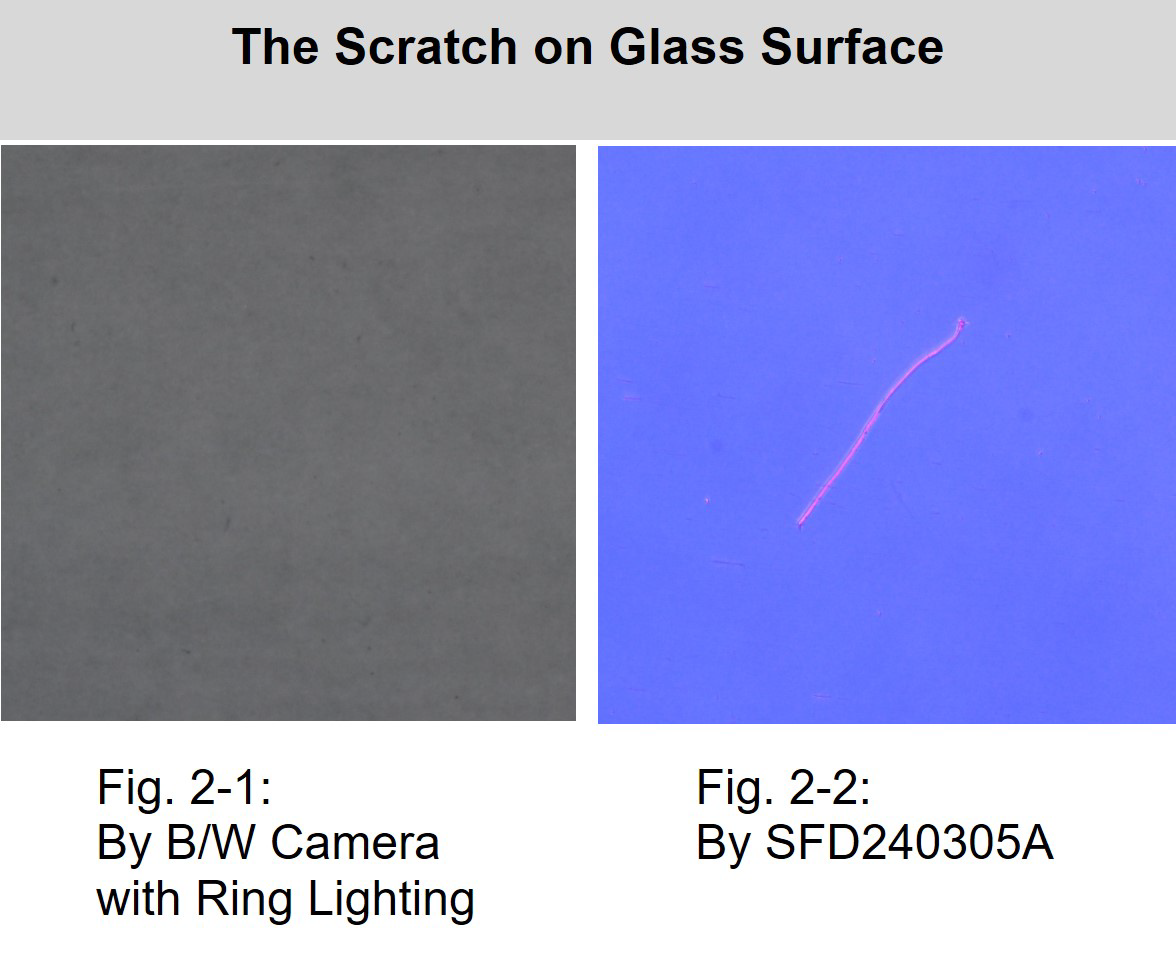

The "SFD240305A" Surface Flaw Detection Scope is an appearance inspection device that employs the "OneShotBRDF(TM)" technology to clearly detect defects such as scratches and flaws on the object using a special optical system, distinguishing them with color.

The device boasts the following features:

- Visualization of Micro-Scratches on Glossy Flat Surfaces: Enables the detection of micro-defects and scratches on mirror-like glossy surfaces, which were previously difficult to observe.

- Visualization of Micro-Scratches on Transparent Flat Surfaces: Enables the detection of micro-defects and scratches on transparent surfaces such as glass, which were previously difficult to observe.

- High-Speed Inspection with Multi-Wavelength Coaxial Aperture for Scattered Light Identification: By optically decomposing the light scattered by defects into color information, the device instantly produces clear images without the need for PC-based image processing.

- Color Identification of Difficult-to-Distinguish Scratches: Because scattered light is captured as color information, even minute defects can be identified. Surface characteristics such as roughness can also be differentiated by color.

The Surface Flaw Detection Scope thus makes the appearance inspection of optical and mechanical parts with glossy, flat surfaces, on which defects have traditionally been hard to detect, stable and straightforward.

Technical details and advantages of the innovation

To conduct a visual inspection using machine vision, at least lighting, lenses, cameras, and a PC are necessary. Each of these components has the following functions:

- Lighting: Serves as the light source that illuminates the object and enables image capture.

- Lens: Gathers light reflected from the object's surface and focuses it onto the camera's image sensor.

- Camera: Converts the intensity of light focused on the image sensor into electrical signals and outputs them as image signals.

- PC: Displays the image signals input from the camera on a monitor screen, processes the images, and based on the inspection results, instructs the inspection system to perform actions.

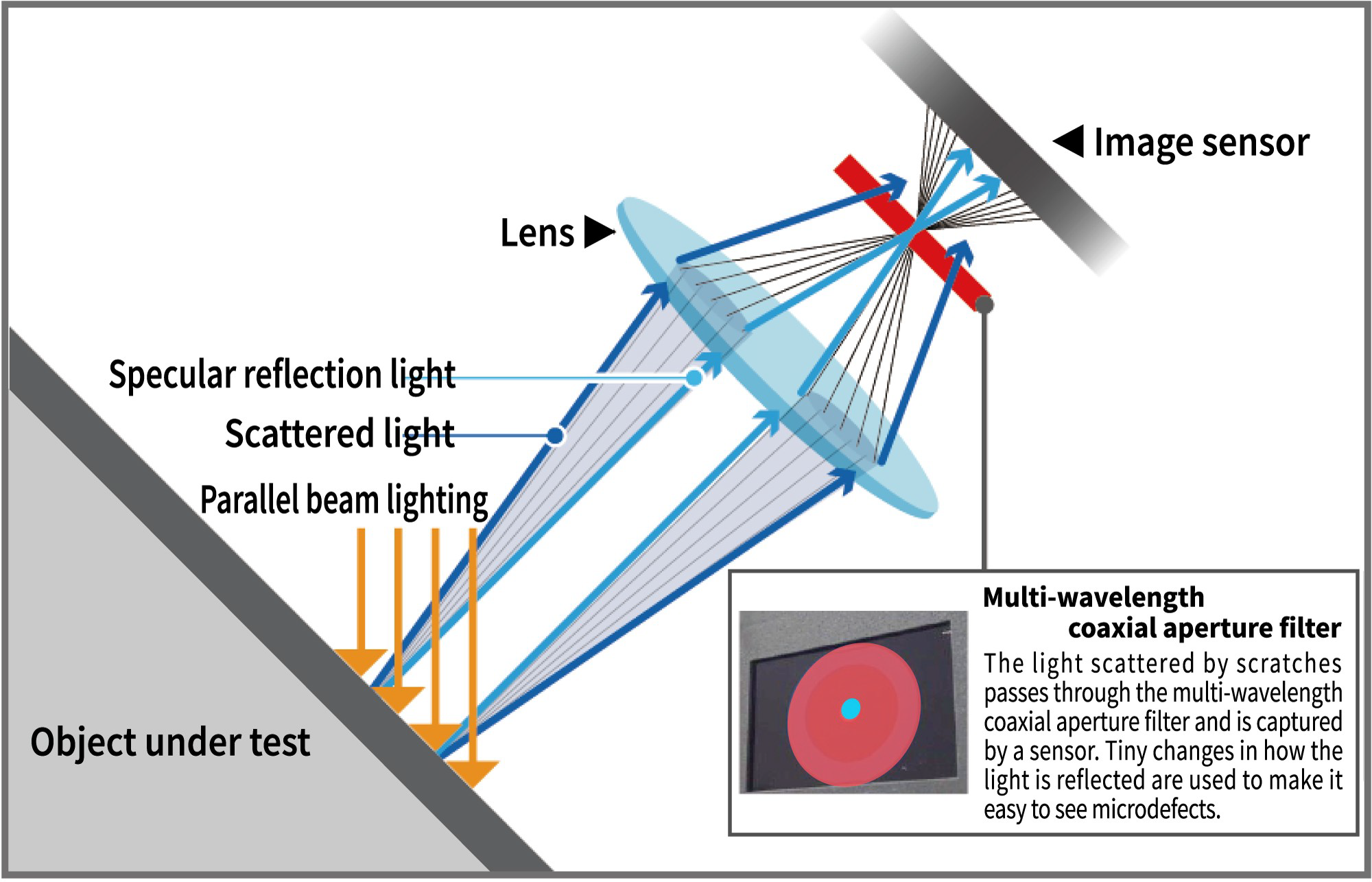

The way illumination light reflects from the object's surface can be broadly classified into specular reflection and scattering, depending on the presence of scratches or foreign objects and on the surface roughness. This behavior is described by the Bidirectional Reflectance Distribution Function (BRDF), which characterizes the angular distribution of reflected light for light incident from a given direction.
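For reference, the standard radiometric definition of the BRDF is the ratio of reflected radiance in the outgoing direction ω_r to the irradiance arriving from the incident direction ω_i. Here θ_i is the angle between the incident direction and the surface normal, and the unit is sr⁻¹. For an ideal mirror, the BRDF is concentrated entirely in the specular direction. Scratches, foreign particles and surface roughness spread it over a wider range of angles, which is the difference the optical system described below exploits.

```latex
f_r(\omega_i, \omega_r)
  = \frac{\mathrm{d}L_r(\omega_r)}{\mathrm{d}E_i(\omega_i)}
  = \frac{\mathrm{d}L_r(\omega_r)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}
```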

On a plane with a glossy finish similar to a mirror, the incident illumination light will reflect specularly. In contrast, if there are scratches or foreign objects on the surface, the incident light will scatter at those points, resulting in partial scattered light. Similarly, on a machined surface with greater surface roughness than a mirror, the marks left by the cutting tool will cause the illumination light to scatter like scratches and foreign objects.

The special optical technology "OneShotBRDF(TM)" used in the Surface Flaw Detection Scope "SFD240305A" is patented by Toshiba Corporation. It consists of a special optical filter called a "multi-wavelength coaxial aperture", placed between the lens and the camera, and an illumination device suited to this technology. The function is realized solely by the light-receiving equipment, so no special lighting apparatus is necessary.

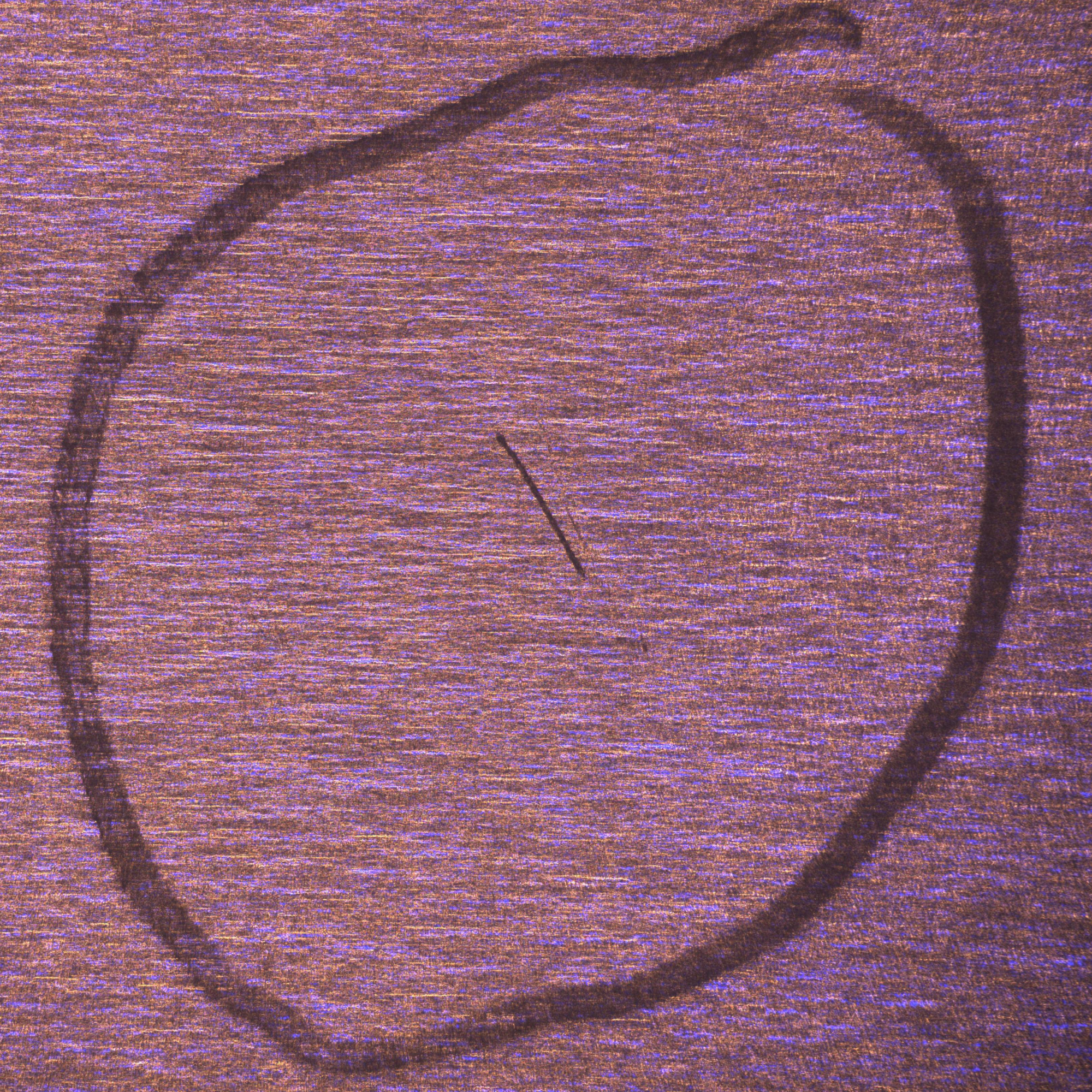

This technology separates the specular reflection light and scattered light by color. With this technology, specular reflection light on the object's surface is separated into blue, while scattered light caused by defects or foreign objects is separated into red. As a result, on objects with a glossy finish close to a mirror, only the scratches and defects will be clearly displayed in red against a blue background.

For objects with a rougher surface and less specular reflection light, blue and red will be displayed in a balance corresponding to the degree of surface roughness.
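Because the optics already encode specular light as blue and scattered light as red, downstream evaluation can reduce to simple per-pixel color arithmetic. This minimal sketch rests on several assumptions that are not from the source: the camera delivers (R, G, B) values, a "scatter ratio" R / (R + B) above a threshold marks a defect, and the mean ratio over an area serves as a rough roughness indicator. The 0.6 threshold is hypothetical and would need calibration per part and setup.

```python
# Hypothetical downstream evaluation of the colour-coded image.

def scatter_ratio(rgb: tuple[float, float, float]) -> float:
    """Share of red (scattered) versus blue (specular) light in one pixel."""
    r, _, b = rgb
    total = r + b
    return r / total if total > 0 else 0.0


def classify(rgb: tuple[float, float, float], threshold: float = 0.6) -> str:
    """Label one pixel 'defect' (red-dominated) or 'normal' (blue-dominated)."""
    return "defect" if scatter_ratio(rgb) > threshold else "normal"


def mean_scatter_ratio(image: list[list[tuple[float, float, float]]]) -> float:
    """Average R/(R+B) over an image: a rough roughness indicator."""
    ratios = [scatter_ratio(px) for row in image for px in row]
    return sum(ratios) / len(ratios)


if __name__ == "__main__":
    mirror_with_scratch = [[(0.05, 0.1, 0.9), (0.8, 0.1, 0.1), (0.05, 0.1, 0.9)]]
    print([classify(px) for px in mirror_with_scratch[0]])  # ['normal', 'defect', 'normal']
    print(round(mean_scatter_ratio(mirror_with_scratch), 3))
```

On a mirror-like part, almost every pixel stays far below the threshold, so isolated red pixels stand out. On a machined surface, the mean ratio rises with roughness, in line with the balance of blue and red described above.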

Thus, the OneShotBRDF technology allows for coloring scratches and defects using purely optical methods without the need for post-processing by a PC. This reduces the time required for image processing on the PC and facilitates visual inspection simply by displaying on a monitor screen. (OneShotBRDF is a trademark or registered trademark of Toshiba Information Systems (Japan) Corporation.)

Relevance and application possibilities of the described innovation for the machine vision industry

Machine vision using industrial cameras and lighting equipment is already employed for the visual inspection of defects and imperfections in mechanical parts. However, parts with flat, mirror-like glossy surfaces or transparent, glass-like surfaces, such as optical filters and polished metal, pose several challenges: reflections of the light source introduce noise into captured images, minute defects are difficult to detect, and defect visibility varies with the observation angle. Consequently, automating the visual inspection of such parts is difficult, and inspections are generally performed by eye. This results in variability between inspectors and a lack of objectivity in inspection records. The Surface Flaw Detection Scope, designed for glossy and flat surfaces, separates defects from normal areas and displays them in different colors based on how light reflects from the object's surface. This not only facilitates visual observation on a monitor but also improves compatibility with image processing, enabling automated machine vision inspections that were previously difficult and thus contributing to stable quality. Additionally, the ability to record images allows objective inspection records to be retained.
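Once defects appear as red pixels, automated inspection can count and size them. This minimal sketch labels a binary defect mask into connected regions using a 4-neighbor flood fill, so each flaw can be logged individually as part of an objective inspection record. The mask format and the `min_pixels` size rule are hypothetical illustration choices, not part of the product.

```python
from collections import deque

# Connected-component labelling of a defect mask (4-neighbour flood fill).

def label_defects(mask: list[list[bool]], min_pixels: int = 1) -> list[list[tuple[int, int]]]:
    """Return the (row, col) coordinates of each connected defect region."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            region, queue = [], deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_pixels:  # drop specks below the size rule
                regions.append(region)
    return regions


if __name__ == "__main__":
    rows = ["..##....",
            "...#....",
            "......#.",
            "#......."]
    mask = [[c == "#" for c in row] for row in rows]
    print([len(r) for r in label_defects(mask)])                # [3, 1, 1]
    print([len(r) for r in label_defects(mask, min_pixels=2)])  # [3]
```

In practice, the mask would come from a color rule such as a red-dominance threshold, and each region's pixel count could be converted to physical size from the optics' scale.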

The Surface Flaw Detection Scope "SFD240305A" can detect minute defects as small as approximately 10 micrometers, and, depending on their severity, even smaller defects may be detectable. With a field of view of approximately ⌀40 mm, it can capture a wide area in a single shot, covering more than traditional microscopic inspection and increasing inspection efficiency. Furthermore, mounting it on an XY stage or a robotic arm allows larger areas to be inspected.
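Covering a larger part with an XY stage is a simple tiling calculation. This worked sketch assumes the roughly 40 mm field of view is a circle of that diameter. It also assumes square tiles inscribed in that circle (side = diameter / √2), a fixed 10% overlap between neighboring tiles, and a hypothetical 100 mm × 100 mm part. None of these layout choices comes from the source.

```python
import math

# Tiling estimate for inspecting a larger part on an XY stage.
# Assumptions: circular field of view, inscribed square tiles, fixed overlap.

def tiles_per_axis(length_mm: float, tile_mm: float, overlap: float) -> int:
    """Number of tile positions needed to cover `length_mm` along one axis."""
    if length_mm <= tile_mm:
        return 1
    step = tile_mm * (1.0 - overlap)
    return math.ceil((length_mm - tile_mm) / step) + 1


def tile_count(width_mm: float, height_mm: float, fov_diameter_mm: float = 40.0,
               overlap: float = 0.1) -> int:
    """Total shots for a rectangular part."""
    tile = fov_diameter_mm / math.sqrt(2.0)  # ~28.3 mm square inside the circle
    return (tiles_per_axis(width_mm, tile, overlap)
            * tiles_per_axis(height_mm, tile, overlap))


if __name__ == "__main__":
    print(tile_count(100.0, 100.0))  # 16 shots for a hypothetical 100 mm x 100 mm part
```

Using the full circular field instead of an inscribed square would reduce the shot count but leave uncovered gaps at the tile corners. The inscribed square is the conservative choice.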

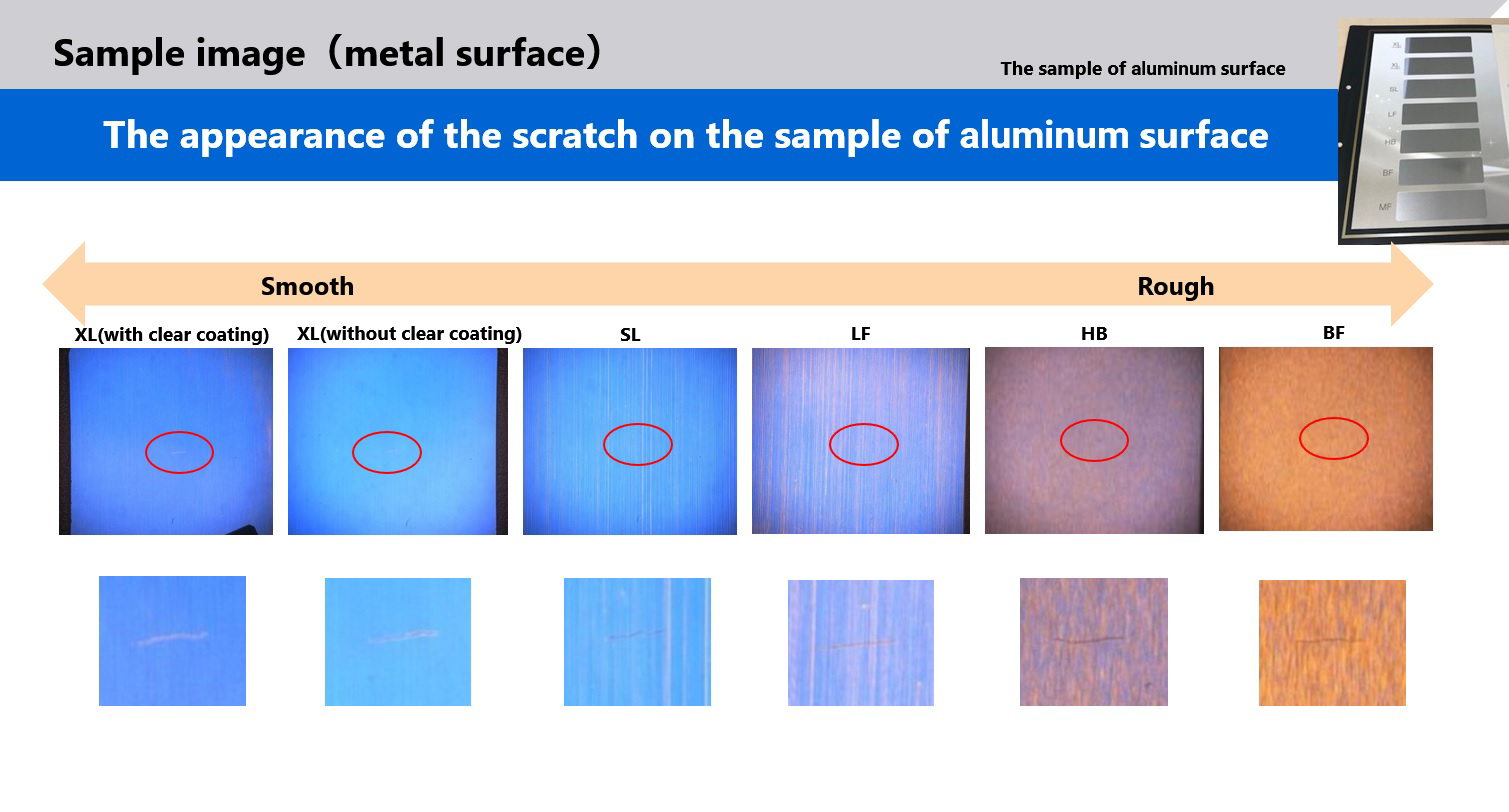

The Surface Flaw Detection Scope colors defect-free normal areas blue and defective areas red, based on how light reflects from the surface of the inspected object. As mentioned earlier, this makes defects easy to identify on the monitor during visual observation and produces images well suited to image processing. For non-mirror-like machined surfaces, the color-separation principle allows surface roughness levels to be identified by color differences, making the device also suitable for evaluating surface finish.

As an application of the OneShotBRDF technology, the use of multicolored special filters allows for the quantitative analysis of the inclination angle of reflected light by color, and its use as a three-dimensional camera is also anticipated.

In this way, the Surface Flaw Detection Scope "SFD240305A" has enabled visual inspections that were difficult with conventional machine vision. As a product specialized for the inspection of glossy and flat parts, it can be utilized for inspecting various flat optical filters and hermetic components such as valves.

Unique Selling Point (USP)

- OneShotBRDF(TM) Technology: Utilizes Toshiba's patented "OneShotBRDF(TM)" for one-shot flaw detection on flat surfaces.

- High-Speed Inspection: Features a multi-wavelength coaxial aperture filter for rapid scanning via scattered light discrimination.

- Color Highlighting: Enhances visibility of microdefects by highlighting scratches in different colors.

When will the innovation be available?

Readily available